Summary: OpenAI's chatbot, ChatGPT, suffered a significant error that allowed some users to see the titles of other users' conversations. The issue was caused by a bug in an open-source library, which exposed brief descriptive titles of conversations but not the full transcripts. The glitch was first highlighted by a Reddit user who posted an image showing descriptions of ChatGPT conversations that weren't theirs, and a Twitter user also shared a screenshot of the same bug.

OpenAI's popular AI chatbot, ChatGPT, experienced a technical glitch that allowed a small number of users to see the titles, but not the content, of other users' conversations with the generative artificial intelligence (AI) tool. ChatGPT's chat history feature was offline for a few days after a bug in an open-source library exposed these brief descriptions of other users' conversations earlier this week.

Since its launch in November 2022, millions of people have used ChatGPT for tasks ranging from drafting messages to writing code. Each conversation is saved in the user's chat history sidebar for later reference. On Monday, however, some users began seeing conversations in their history that they said they had never had with the chatbot.

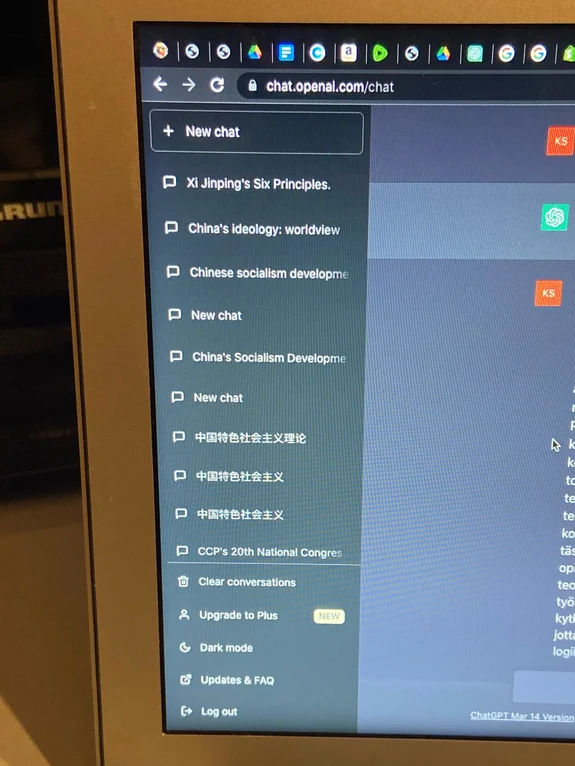

The issue was first reported in a Reddit thread whose author posted a screenshot of several conversations that did not belong to them and voiced concern that ChatGPT might have been hacked. Most of the conversations shown concerned China's development under socialist rule, with titles such as “Chinese Socialism Development”, and some were in Mandarin, which raised questions about the flaw's origin.

A Twitter user posted another image showing the same issue, with titles referring to speeches about books on human behavior and to questions about preparing for a film production.

OpenAI CEO Sam Altman acknowledged that the problem was caused by the bug but said that no private user information had been shared. He also confirmed that a fix for the library had been released and validated by OpenAI, but did not say when users could expect their chat histories to return. Altman confirmed to Bloomberg that OpenAI had temporarily disabled the sidebar feature through which the bug could be exploited while it worked on a fix.

Although the blunder did not expose the content of any conversations, it has raised concern among users who worry that their private information could leak through the tool. As a safeguard, an FAQ on OpenAI's website warns ChatGPT users not to share sensitive information in their conversations. The company notes that it cannot delete specific prompts from a user's history and that conversations may be used for training. OpenAI's privacy policy likewise states that user data, such as prompts and responses, may be used to continue training the model, though only after personally identifiable information has been removed.