AI models are evolving faster than ever, but inference efficiency remains a major challenge. As companies expand their AI use cases, low-latency, high-throughput inference becomes critical. Legacy inference servers were good enough in the past, but they struggle to keep up with today’s large models.

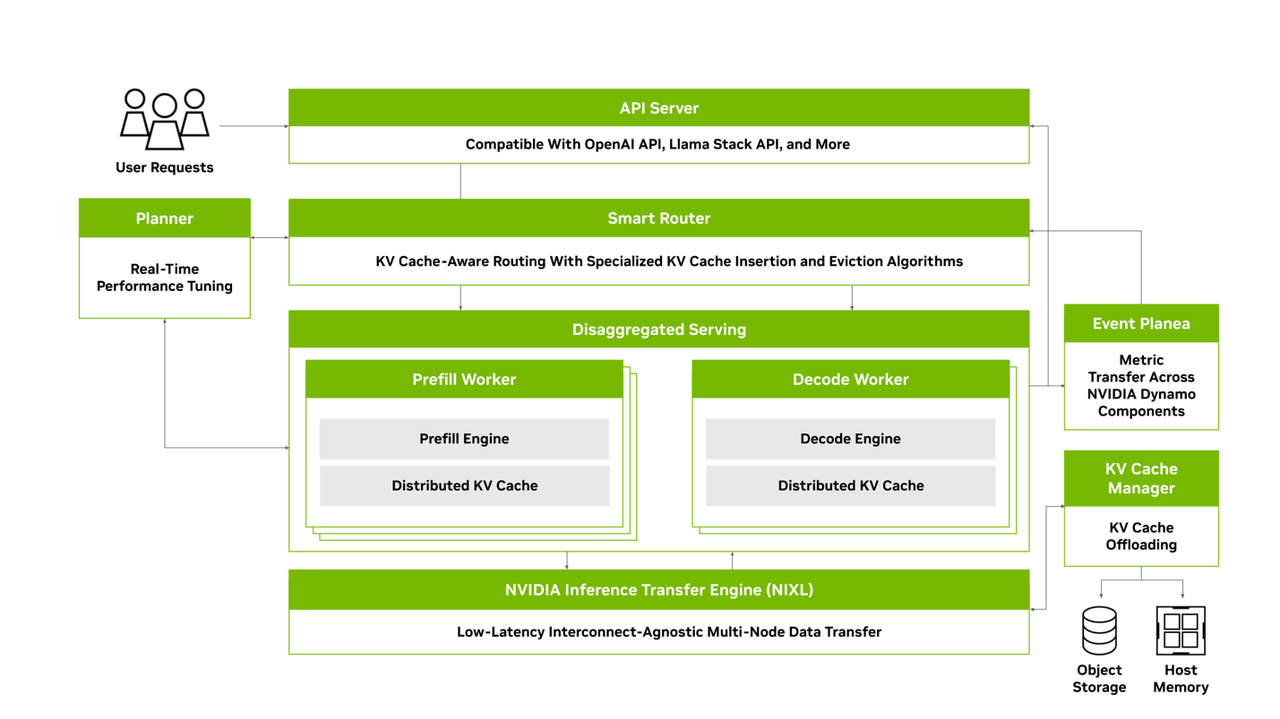

That’s where NVIDIA Dynamo comes in. Unlike traditional inference frameworks, Dynamo is built specifically for large-scale, distributed generative AI workloads: it reduces latency and intelligently balances resource utilization. Having worked with various inference systems, I have seen firsthand how complicated and expensive AI inference can be. Dynamo introduces dynamic GPU scheduling, disaggregated inference, and intelligent request routing, offering a better way to manage AI inference at scale.

In this post, I will go over NVIDIA Dynamo’s key innovations, its inference performance, and why you should consider it for your next-generation AI deployments.

The Evolution of AI Inference

Historically, AI inference was tied to framework-specific servers, which were rigid and inefficient. In 2018, NVIDIA launched the inference server now known as Triton Inference Server, which unified multiple framework-specific inference implementations.

But as AI models have grown over 2,000x in size, new challenges have emerged:

- Large models require massive GPU compute resources

- Inefficient processing pipelines increase inference latency

- Suboptimal resource utilization across multiple inference requests

To address these challenges, NVIDIA Dynamo introduces an inference-first, distributed architecture that optimizes GPU utilization and reduces latency.

Key Innovations in NVIDIA Dynamo

After trying NVIDIA Dynamo, I found several features that set it apart from traditional inference solutions:

1. Disaggregated Prefill and Decode Inference

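Inference for large AI models suffers from uneven workload distribution when prefill (processing the input prompt and populating the KV cache) and decode (generating output tokens one at a time) run on the same GPU. The two phases have very different profiles: prefill is compute-bound, while decode is largely memory-bandwidth-bound, so co-locating them leaves resources underused.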

NVIDIA Dynamo solves this by running these phases on separate GPUs, so they can execute in parallel and each pool can be sized and tuned independently.

Real-world impact: Consider running a large LLM such as DeepSeek-R1 671B. Traditionally, token generation hits latency bottlenecks due to inefficient GPU utilization. With Dynamo, prefill and decode run on separate GPUs, which can substantially improve response times.
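To make the handoff concrete, here is a minimal Python sketch of the prefill/decode split. The names `PrefillWorker`, `DecodeWorker`, and `serve` are hypothetical and not part of Dynamo’s API, and the "model" is a toy arithmetic rule. The point is only the shape of the workflow: prefill builds the KV cache once from the whole prompt, the cache is handed to a separate worker, and decode extends it one token at a time.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KVCache:
    """Stand-in for the attention keys/values a prefill pass produces."""
    request_id: str
    tokens: list[int] = field(default_factory=list)


class PrefillWorker:
    """Hypothetical worker on the prefill GPU pool: processes the whole prompt at once."""

    def run(self, request_id: str, prompt_tokens: list[int]) -> KVCache:
        # A real worker would run one forward pass over every prompt token
        # and write their keys/values into GPU memory.
        return KVCache(request_id, list(prompt_tokens))


class DecodeWorker:
    """Hypothetical worker on the decode GPU pool: generates one token per step."""

    def run(self, cache: KVCache, max_new_tokens: int) -> list[int]:
        generated = []
        for _ in range(max_new_tokens):
            # Toy "model": the next token is the sum of the last two tokens, mod 100.
            next_token = sum(cache.tokens[-2:]) % 100
            cache.tokens.append(next_token)  # the cache grows by one entry per step
            generated.append(next_token)
        return generated


def serve(request_id, prompt_tokens, max_new_tokens, prefill, decode):
    cache = prefill.run(request_id, prompt_tokens)  # phase 1: prefill pool
    # In a disaggregated deployment, the KV cache is transferred here from the
    # prefill GPU to the decode GPU instead of staying on one device.
    return decode.run(cache, max_new_tokens)  # phase 2: decode pool


print(serve("req-1", [3, 4], 3, PrefillWorker(), DecodeWorker()))  # [7, 11, 18]
```

In a real disaggregated deployment the two pools run concurrently on different GPUs, so the prefill pool can start on the next prompt while the decode pool is still generating tokens for earlier ones.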

2. Dynamic GPU Scheduling

Static GPU allocation creates bottlenecks in AI inference. When an LLM serves many queries at once, some GPUs can sit idle while others are saturated.

Dynamo monitors GPU utilization in real time and reallocates resources as demand shifts (for example, moving GPUs between prefill and decode work), keeping compute usage high.

Use case: This is especially useful for real-time recommendation systems and chatbots, where demand fluctuates. Instead of over-provisioning hardware (which is expensive), Dynamo adjusts GPU workloads dynamically to maximize efficiency.
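The scheduling idea can be illustrated with a toy planner. This is a sketch under stated assumptions, not Dynamo’s planner: `plan_gpus` is a hypothetical function that splits a fixed GPU budget between prefill and decode pools in proportion to pending work, with queue depth standing in for the utilization signals a real system would monitor.

```python
from __future__ import annotations


def plan_gpus(total_gpus: int, prefill_queue: int, decode_queue: int) -> tuple[int, int]:
    """Split a fixed GPU budget between prefill and decode pools.

    Hypothetical heuristic: allocate in proportion to each pool's pending work,
    keeping at least one GPU in each pool so neither phase stalls.
    """
    if total_gpus < 2:
        raise ValueError("need at least one GPU per pool")
    pending = prefill_queue + decode_queue
    if pending == 0:
        # No backlog: split evenly.
        half = total_gpus // 2
        return half, total_gpus - half
    prefill = round(total_gpus * prefill_queue / pending)
    prefill = min(max(prefill, 1), total_gpus - 1)  # clamp to [1, total_gpus - 1]
    return prefill, total_gpus - prefill
```

For example, `plan_gpus(8, 30, 10)` returns `(6, 2)`; if the backlog later shifts toward decode, `plan_gpus(8, 10, 30)` returns `(2, 6)`. Rerunning a planner like this periodically is what turns a static allocation into a dynamic one.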

3. LLM-Aware Request Routing

Traditional inference servers use generic load balancing and treat all incoming requests equally. But different AI models have different compute requirements.

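Dynamo introduces LLM-aware request routing. Its router tracks which workers already hold KV cache for a request’s prompt prefix and steers requests to minimize recomputation, taking into account factors such as: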

- Model complexity

- Compute requirements

- Latency constraints

Why it matters: If an AI system serves both a chatbot and a multimodal recommendation engine, Dynamo routes requests dynamically to avoid bottlenecks and improve system-wide efficiency.
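One way to picture LLM-aware routing is a scoring function that rewards KV cache reuse and penalizes load. The sketch below is hypothetical: the `Worker` fields, the `route` function, and the `load_weight` parameter are illustrative assumptions, not Dynamo’s router. Each worker’s score is the length of the longest cached prefix it shares with the incoming prompt, minus a penalty per in-flight request.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Worker:
    name: str
    active_requests: int = 0
    # Token prefixes whose KV cache this worker already holds (hypothetical bookkeeping).
    cached_prefixes: list[tuple[int, ...]] = field(default_factory=list)


def shared_prefix_len(a, b) -> int:
    """Number of leading tokens two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def route(prompt: list[int], workers: list[Worker], load_weight: float = 1.0) -> Worker:
    """Pick the worker with the best trade-off between cache reuse and load."""

    def score(w: Worker) -> float:
        # Tokens we could skip recomputing count in the worker's favour...
        overlap = max((shared_prefix_len(prompt, p) for p in w.cached_prefixes), default=0)
        # ...and each in-flight request counts against it.
        return overlap - load_weight * w.active_requests

    return max(workers, key=score)
```

A generic round-robin balancer would ignore the cached prefix entirely; weighing overlap against load lets a router avoid recomputing a long shared prompt without piling every request onto one worker.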

4. KV Cache Offloading for Memory Optimization

LLM inference relies on a key-value (KV) cache, which stores the attention keys and values of previous tokens so they do not have to be recomputed at every decoding step. However, KV caches can exceed GPU memory capacity, leading to performance degradation.
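A back-of-the-envelope calculation shows how quickly the KV cache grows. Each token stores one key and one value vector per layer, so the cache size is 2 × layers × KV heads × head dimension × bytes per element × tokens. The sketch assumes a Llama-70B-class configuration (80 layers, 8 KV heads with grouped-query attention, head dimension 128, FP16) and a hypothetical batch of 32 sequences of 8,192 tokens each.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, bytes_per_elem, num_tokens):
    # Factor of 2: one key tensor and one value tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * num_tokens


# Assumed Llama-70B-class config: 80 layers, 8 KV heads, head_dim 128, FP16 (2 bytes).
per_token = kv_cache_bytes(80, 8, 128, 2, 1)
batch_total = kv_cache_bytes(80, 8, 128, 2, 32 * 8192)  # 32 sequences x 8,192 tokens

print(per_token // 1024, "KiB per token")
print(batch_total / 2**30, "GiB for the batch")
```

Under these assumptions, each token needs 320 KiB, and the batch needs 80 GiB of KV cache alone, more than the 80 GB of HBM on a single H100 before any model weights are loaded.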

Dynamo provides an optimized KV cache offloading mechanism that:

- Frees up GPU memory for critical inference operations

- Utilizes multiple memory hierarchies (HBM, DRAM, SSD)

- Improves token generation throughput
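The multi-tier idea can be sketched as a block store that spills least-recently-used KV blocks from HBM to DRAM to SSD and promotes a block back to HBM when it is reused. `TieredKVCache` is a hypothetical toy, not Dynamo’s KV cache manager: capacities are counted in blocks, the SSD tier is treated as unbounded, and no real data movement happens.

```python
from __future__ import annotations

from collections import OrderedDict


class TieredKVCache:
    """Toy three-tier KV block store: HBM -> DRAM -> SSD, LRU order within each tier."""

    def __init__(self, hbm_blocks: int, dram_blocks: int):
        self.capacity = {"HBM": hbm_blocks, "DRAM": dram_blocks}
        # Dict order doubles as tier order (fastest first); SSD has no capacity limit.
        self.tiers = {"HBM": OrderedDict(), "DRAM": OrderedDict(), "SSD": OrderedDict()}

    def _spill(self, tier: str, lower: str) -> None:
        # Move least-recently-used blocks down until this tier fits its budget.
        while len(self.tiers[tier]) > self.capacity[tier]:
            key, value = self.tiers[tier].popitem(last=False)
            self.tiers[lower][key] = value

    def put(self, block_id, data) -> None:
        for t in self.tiers.values():
            t.pop(block_id, None)  # drop any stale copy in a lower tier
        self.tiers["HBM"][block_id] = data  # newest blocks land in HBM
        self._spill("HBM", "DRAM")
        self._spill("DRAM", "SSD")

    def get(self, block_id):
        """Return (tier the block was found in, data) and promote it back to HBM."""
        for name, t in self.tiers.items():
            if block_id in t:
                data = t.pop(block_id)
                self.put(block_id, data)
                return name, data
        raise KeyError(block_id)

    def where(self, block_id):
        for name, t in self.tiers.items():
            if block_id in t:
                return name
        return None
```

Reusing an offloaded block (for example, a shared system prompt in a multi-turn chat) can be cheaper than recomputing it with another prefill pass, which is where the throughput benefit of offloading comes from.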

These innovations make NVIDIA Dynamo a breakthrough in AI inference, delivering scalability and efficiency across various deployment scenarios.

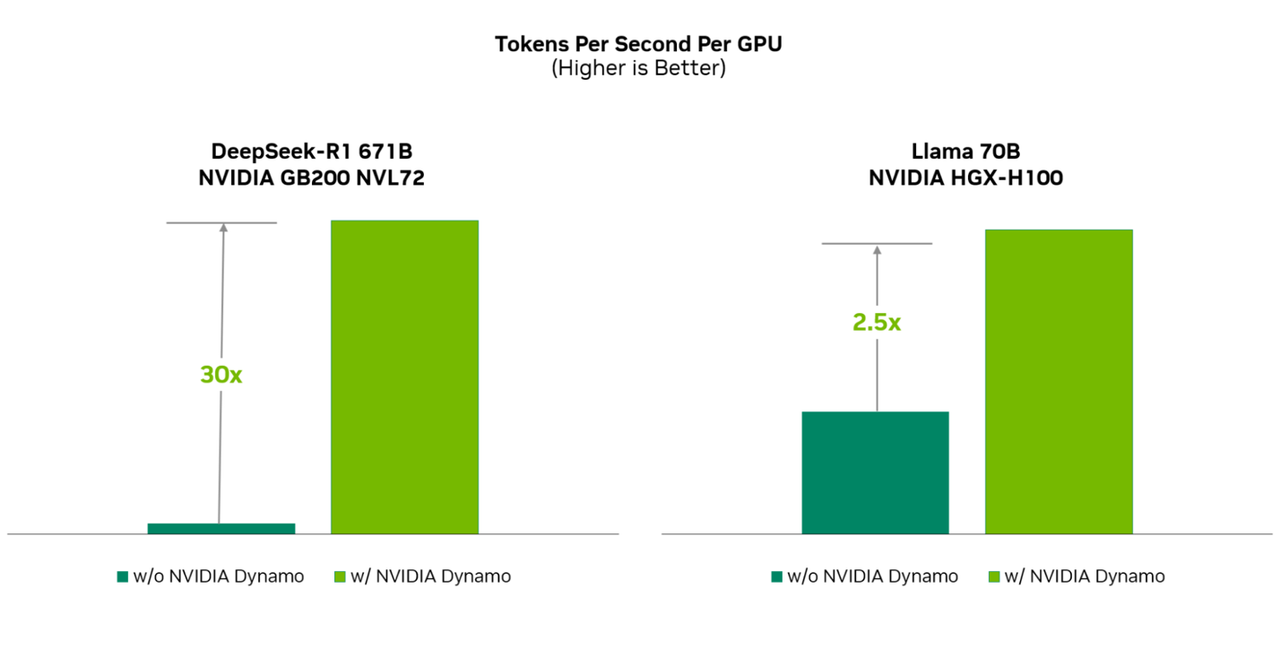

Performance Benchmarks: NVIDIA Dynamo vs. Traditional Inference

Performance is crucial, so I was eager to see how NVIDIA Dynamo compares with existing inference solutions. The reported results highlight its advantages:

Key Performance Metrics:

| AI Model | Hardware | Performance Improvement |
| --- | --- | --- |
| DeepSeek-R1 671B | GB200 NVL72 | 30x higher tokens per second per GPU |
| Llama 70B | NVIDIA Hopper™ | 2x improvement in throughput |

These results suggest that Dynamo can scale large AI models at a lower compute cost.

Enterprise Adoption and Applications in the Real World

NVIDIA Dynamo is designed for organizations requiring scalable AI inference with minimal latency overhead.

Key Applications:

- AI-Powered Customer Service Systems

  - Faster chatbot responses for improved real-time user interaction

  - Optimized multi-turn conversation handling for AI agents (e.g., ChatGPT)

- Personalized Recommendations

  - Reduced query response times for AI-driven recommendations

  - Improved inference efficiency for e-commerce and video streaming platforms

- Autonomous Systems & Edge AI

  - AI-driven robotics with optimized inference latency

  - Lower power consumption for inference on edge devices

For enterprises deploying LLMs, recommendation engines, or real-time AI models, Dynamo offers an enterprise-scale inference solution.

Getting Started with NVIDIA Dynamo

Developers can deploy NVIDIA Dynamo, which is open source and available on GitHub. For production, NVIDIA also offers enterprise-ready NIM microservices.

Steps to Get Started:

- Download NVIDIA Dynamo from GitHub.

- Explore end-to-end inference examples.

- Deploy AI models using one of Dynamo’s supported inference backends (such as TensorRT-LLM, vLLM, or SGLang).

- Scale enterprise workloads with NVIDIA AI Enterprise’s NIM services.
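Once a deployment is running, clients talk to it over HTTP. The sketch below assumes the Dynamo frontend exposes an OpenAI-compatible chat completions endpoint at `http://localhost:8000/v1/chat/completions`; the URL, port, and model name are assumptions to adjust for your own deployment. It uses only the Python standard library.

```python
import json
import urllib.request

# Assumption: address of an OpenAI-compatible Dynamo frontend; change to match your deployment.
DYNAMO_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(model: str, prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        DYNAMO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `chat("<served-model-name>", "Hello")` sends the request and returns the assistant’s reply; the model name must match one your deployment actually serves.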

For a hands-on tutorial, visit the NVIDIA Developer Blog.

Conclusion

NVIDIA Dynamo is more than just another inference framework; it represents a paradigm shift in AI inference efficiency.

With its dynamic resource scheduling, optimized request routing, and disaggregated inference processing, Dynamo sets a new standard for scalable AI deployment.

Why it matters:

- GPU utilization is optimized for large-scale AI models

- 30x higher tokens per second per GPU reported for DeepSeek-R1 671B on GB200 NVL72

- Enterprise-ready inference framework for production-scale deployments

For organizations building next-generation AI applications, Dynamo is a compelling solution worth considering.