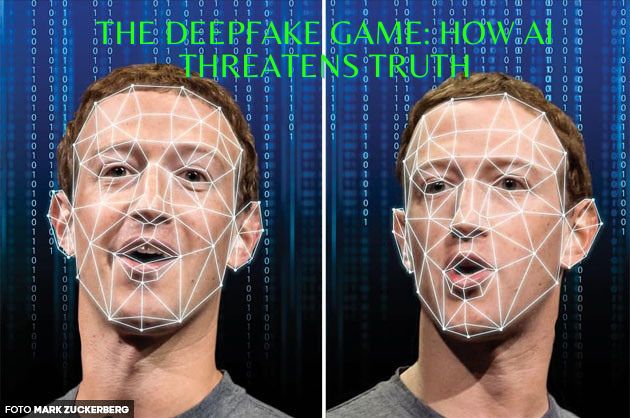

Artificial Intelligence (AI) has brought numerous technological advances and has the potential to revolutionize many industries, but it also brings a new set of challenges and concerns. One of the most pressing is the threat that AI poses to the truth. The rise of deepfake technology, in particular, has called into question what we can trust as authentic. As deep learning techniques advance, deepfakes have become increasingly sophisticated, making it harder to tell what is real from what is fake.

What are Deepfakes?

Deepfakes are manipulated media, including images, videos, and audio, that are designed to appear genuine but are in fact artificially generated. They are often created using a deep learning technique called a Generative Adversarial Network (GAN). A GAN is a type of artificial intelligence system that consists of two competing neural networks. One network generates fake media, while the other, trained on real data, acts as a discriminator that judges whether a given sample is authentic.

The two networks engage in continuous competition, which leads to the quality of the generated media improving over time. As a result, it becomes increasingly difficult for the discriminator to distinguish between real and fake media. For example, a search for “fake GAN faces” on the internet produces countless examples of high-resolution images of people who never existed but appear real.

The Deepfake Game: A Generative Adversarial Network

Generative adversarial networks (GANs) were invented in 2014 by Ian Goodfellow, then a graduate student at the University of Montreal. Goodfellow and a friend were discussing how to build a deep-learning system that could generate high-quality images. Goodfellow proposed the concept of a GAN and, despite initial skepticism, wrote the code for his first working GAN. Today, Goodfellow is considered a legend in the field of deep learning.

A GAN is composed of two integrated deep neural networks. The first network, the “generator,” fabricates images, while the second, trained on a set of real photographs, is called the “discriminator.” The images produced by the generator are mixed with real photos and passed to the discriminator, which evaluates each one and decides whether it is real or fake. The two networks compete continuously, with the generator's task being to deceive the discriminator. As the competition continues, the quality of the images improves until the system reaches an equilibrium, at which the discriminator can do no better than guess whether an image is real. The result is fabricated images so realistic that some of them are practically impossible to identify as fake.
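To make the generator-versus-discriminator contest concrete, here is a minimal sketch in plain Python. It is a toy, not how image deepfakes are actually produced: the "images" are single numbers, the real data is assumed to come from a bell curve centered at 4, and each network is shrunk to just two parameters. The names `TinyGAN` and `sample_real`, and every parameter value, are assumptions made for illustration.

```python
import math
import random


def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class TinyGAN:
    """1-D toy: generator G(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c)."""

    def __init__(self, lr=0.05, seed=0):
        self.a, self.b = 1.0, 0.0  # generator parameters (hypothetical start)
        self.w, self.c = 0.0, 0.0  # discriminator parameters (hypothetical start)
        self.lr = lr
        self.rng = random.Random(seed)

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        # Estimated probability that x is real.
        return sigmoid(self.w * x + self.c)

    def train_step(self, real_batch):
        n = len(real_batch)
        noise = [self.rng.gauss(0.0, 1.0) for _ in range(n)]
        fake_batch = [self.generate(z) for z in noise]

        # Discriminator step: minimise -[log D(real) + log(1 - D(fake))].
        gw = gc = 0.0
        for xr, xf in zip(real_batch, fake_batch):
            dr, df = self.discriminate(xr), self.discriminate(xf)
            gw += (dr - 1.0) * xr + df * xf
            gc += (dr - 1.0) + df
        self.w -= self.lr * gw / n
        self.c -= self.lr * gc / n

        # Generator step: minimise -log D(fake), i.e. try to fool
        # the freshly updated discriminator.
        ga = gb = 0.0
        for z in noise:
            df = self.discriminate(self.generate(z))
            ga += (df - 1.0) * self.w * z
            gb += (df - 1.0) * self.w
        self.a -= self.lr * ga / n
        self.b -= self.lr * gb / n


def sample_real(rng, n, mean=4.0, std=0.5):
    # Stand-in for "real photographs": numbers drawn around an assumed mean.
    return [rng.gauss(mean, std) for _ in range(n)]


if __name__ == "__main__":
    data_rng = random.Random(1)
    gan = TinyGAN()
    for _ in range(2000):
        gan.train_step(sample_real(data_rng, 64))
    print("generator offset b:", round(gan.b, 3))
    print("D(4.0):", round(gan.discriminate(4.0), 3))
```

Adversarial training of this kind can oscillate rather than settle, so the printed values are not guaranteed to land exactly on the equilibrium; the point of the sketch is the alternating update, in which each side improves against the other.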

The Power of Generative Adversarial Networks

GANs have many useful applications, including synthesizing images or other media for use as training data for other systems. For example, deep neural networks for self-driving cars can be trained on images created with GANs. It has also been suggested that generated faces of non-white people be used to train facial recognition systems, which could help reduce racial bias in cases where it is not possible to ethically obtain enough high-quality photographs of real people of color. In voice synthesis, GANs could give people who have lost the ability to speak a computer-generated replacement that sounds like their own voice. The late Stephen Hawking, who lost the ability to speak due to Amyotrophic Lateral Sclerosis (ALS), “spoke” in a synthesized voice. More recently, people with ALS, such as Tim Shaw, have been able to speak in their own voice, reconstructed by deep learning networks trained on pre-illness recordings.

The Threat Posed by Deepfakes

While the potential of GANs is undeniable, it is also tempting for many tech-savvy individuals to abuse this technology. The ability to manipulate media and create deepfakes that appear genuine can be used to spread misinformation and cause harm. Fake video clips are already available to the general public and are often created for humor or educational purposes. For example, there are fake videos of well-known figures such as Mark Zuckerberg saying things they would never say publicly.

The most common method of creating deepfakes is to digitally transfer the face of one person onto a real video of another. According to a deepfake detection startup called Sensity (formerly Deeptrace), there was an 84% increase in the number of deepfakes posted online in 2019, with 15,000 being posted that year. Of these deepfakes, 96% were celebrity pornographic images or videos, with the faces of famous women being combined with the bodies of porn actresses.

Celebrities such as Taylor Swift and Scarlett Johansson have been frequent targets, but with technology continuing to advance and deepfake tools becoming more readily available, almost anyone can become a victim of digital abuse.

The quality of deepfakes is rapidly improving, and the potential for these fabricated images and videos to cause harm is growing. A credible deepfake could alter the course of history, and the means to create one may soon fall into the hands of political strategists, foreign governments, or even mischievous teenagers. However, it's not just politicians and celebrities who should be concerned. In an age of viral videos, social media defamation, and cancel culture, almost anyone can become the target of a deepfake that could ruin their career and life. We have already seen how viral videos of police brutality can spark widespread protests and unrest; a convincing fabricated video could provoke a similar reaction.

The dangers of deepfakes go beyond intentional harm and political manipulation. The technology also opens up practically unlimited illegal opportunities for those seeking to make a quick profit. Criminals will use deepfakes for a variety of purposes, such as financial fraud, insurance scams, and manipulation of the securities market. A fabricated video of a CEO making a false statement or behaving strangely, for example, could cause the company's stock to plummet. Deepfakes will also interfere with the functioning of the legal system: fake media could be presented as evidence, leaving judges and juries potentially unable to distinguish real from fake.

Of course, researchers are working to find solutions to the problems posed by deepfakes and adversarial attacks on artificial intelligence. Companies such as Sensity are offering software that can detect deepfakes, but it remains a constant arms race between creators of deepfakes and those working to protect against them. Ultimately, the best way to navigate this new reality of illusion may be through authentication mechanisms such as digital signatures for photos and videos. However, even this will not be a foolproof solution.
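As a rough illustration of what authenticating a photo or video could look like, the sketch below tags the bytes of a media file and later checks that they have not been altered. It makes one important substitution: Python's standard library has no public-key signatures, so an HMAC with a shared secret key stands in for a true digital signature. A real provenance scheme would more plausibly use a private key held by the camera or publisher. The function names `sign_media` and `verify_media`, the key, and the sample bytes are all hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, key: bytes) -> str:
    # Compute an authentication tag over the exact bytes of the file.
    # Assumption: HMAC-SHA256 stands in for a public-key signature.
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, key: bytes, tag: str) -> bool:
    # Recompute the tag and compare in constant time.
    expected = sign_media(media_bytes, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"demo-key-not-for-real-use"        # hypothetical secret
    original = b"\x89PNG...pretend image bytes"  # placeholder content
    tag = sign_media(original, key)

    tampered = original + b" (edited)"
    print("original verifies:", verify_media(original, key, tag))
    print("tampered verifies:", verify_media(tampered, key, tag))
```

A check like this only shows that a file is unchanged since it was tagged. It says nothing about whether the content was genuine when it was signed, which is one reason such mechanisms are not foolproof.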

Adversarial attacks, in which specially crafted input deceives a machine learning system, are also a growing concern. These attacks can have serious consequences, such as causing self-driving cars to make mistakes. The AI research community recognizes the vulnerability posed by adversarial attacks, and researchers such as Ian Goodfellow are working on ways to make machine learning systems more secure. However, attackers tend to stay one step ahead, which makes building a truly robust defense a challenge.
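A minimal sketch of how such a crafted input can work, assuming a toy linear classifier with made-up weights rather than any real vision system: each input value is nudged by a small, fixed amount in the direction that most increases the classifier's error. This follows the spirit of the fast gradient sign method; the names `fgsm_linear`, `W`, `B`, and `X`, and all the numbers, are hypothetical.

```python
# Hypothetical linear classifier: predicts class 1 when W.x + B > 0.
W = [0.5, -1.0, 0.25, 2.0]
B = -0.1
X = [1.0, 0.2, 0.4, 0.3]  # an input the classifier labels as class 1


def sign(v):
    return (v > 0) - (v < 0)


def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def fgsm_linear(w, x, true_label, eps):
    # For a logistic loss, the gradient with respect to the input is
    # (p - y) * w, so its sign is -sign(w) when y = 1 and +sign(w) when y = 0.
    direction = -1 if true_label == 1 else 1
    return [xi + eps * direction * sign(wi) for xi, wi in zip(x, w)]


if __name__ == "__main__":
    x_adv = fgsm_linear(W, X, true_label=1, eps=0.3)
    print("original prediction:   ", predict(W, B, X))
    print("adversarial prediction:", predict(W, B, x_adv))
    print("largest change to any input value:",
          max(abs(p - q) for p, q in zip(x_adv, X)))
```

With these particular numbers, no input value moves by more than about 0.3, yet the prediction flips from class 1 to class 0. Real attacks on image models apply the same idea across thousands of pixels, where the per-pixel change can be too small for a person to notice.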

As artificial intelligence becomes more widely used, especially with the rise of the Internet of Things, security risks will become even more significant. Autonomous systems, such as unmanned trucks delivering essential supplies, will become attractive targets for cyberattacks. It is essential to invest in research to create robust AI systems and to work on regulation and protection measures before critical vulnerabilities emerge. The development of artificial intelligence will come with systemic risks, including threats to critical infrastructure, the economy, and democratic institutions. Security is therefore arguably the most important threat in the short term, and addressing it is critical.