What is data modeling? Data modeling is the process of creating a data model that represents an organization's data and the relationships within it. It involves identifying data requirements and designing a blueprint for a database that is organized, accurate, and easy to access. The data model serves as a visual representation of the data and its relationships, and it helps ensure that data is consistent and complete. It is a crucial step in any database or data analysis project, because it provides a structured way to represent and understand the data. With the amount of data generated every day still increasing, data modeling has become more critical than ever.

At its core, data modeling involves creating conceptual, logical, and physical data models. A conceptual data model represents the overall structure of the data, and it is usually created at the beginning of a project. A logical data model represents the relationships between the different entities in the data and defines the data elements and their attributes. A physical data model represents the implementation of the logical data model, taking into account the specific requirements of the technology being used.

The goal of data modeling is to create a structure that can be used to organize and manage data effectively.


Introduction to Data Modeling

Data is one of the most valuable assets that any organization possesses. However, managing data effectively can be a daunting task. This is where data modeling comes into play. Data modeling is the process of creating a visual representation of data to facilitate better understanding and management of data. In this section, we will explore the fundamentals of data modeling, including key terms, different types of data models, and the benefits of data modeling.

Entities and Attributes

Before we dive into data modeling, it is essential to understand two key terms: entities and attributes. Entities are objects or concepts that are important to the organization and can be represented in data. For example, in a customer database, the entity could be a customer. Attributes are characteristics of entities that are stored in a database. For example, a customer entity could have attributes such as name, address, and phone number.
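To make the two terms concrete, here is a minimal sketch using Python's built-in `sqlite3` module, assuming a relational database: the customer entity becomes a table and each attribute becomes a column. The `customer_id` column is a hypothetical identifier added for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The "customer" entity becomes a table; each attribute becomes a column.
# customer_id is a hypothetical identifier added for illustration.
conn.execute(
    """
    CREATE TABLE customer (
        customer_id  INTEGER PRIMARY KEY,
        name         TEXT NOT NULL,
        address      TEXT,
        phone_number TEXT
    )
    """
)

# Each row is one instance of the entity: one particular customer.
conn.execute(
    "INSERT INTO customer (name, address, phone_number) VALUES (?, ?, ?)",
    ("Alice Smith", "12 Elm St", "555-0100"),
)

# PRAGMA table_info returns one row per column; index 1 holds the column name.
columns = [row[1] for row in conn.execute("PRAGMA table_info(customer)")]
print(columns)  # ['customer_id', 'name', 'address', 'phone_number']
```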

Types of Data Models

There are three primary types of data models: conceptual, logical, and physical. A conceptual data model provides an abstract view of the data without going into details about how the data is stored. A logical data model describes the data in detail, including its attributes, keys, and relationships, without committing to a particular database system. A physical data model specifies the physical implementation of the data model in a database system.

Benefits of Data Modeling

Data modeling is essential for creating software applications that are efficient, reliable, and easy to maintain. A well-designed data model ensures that the data is organized, consistent, and easy to access, which makes it easier for developers to write code that works correctly the first time.

Data modeling has many benefits, including better communication and understanding of data, improved data quality, and increased productivity. By creating a visual representation of data, stakeholders can better understand how data is related, which can lead to improved decision-making. Data modeling can also help identify data inconsistencies and redundancies, leading to improved data quality. Finally, data modeling can help increase productivity by reducing the time and effort required to manage and manipulate data.

Data Modeling Process

The data modeling process involves several steps. The first step is to identify the entities and attributes that are important to the organization. The next step is to create a conceptual data model that provides an abstract view of the data. The logical data model is then created, which describes the data in detail, including attributes, keys, and relationships, independent of any particular database system. Finally, the physical data model specifies how the model is implemented in a specific database system.
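The last two steps can be sketched in Python under a stated assumption: the logical model is written as a plain dictionary (a hypothetical format, not a standard one), and a hypothetical helper, `to_physical_ddl`, turns it into SQLite `CREATE TABLE` statements, which is one possible physical model. The entity names and the type mapping are illustrative only.

```python
import sqlite3

# Hypothetical logical model: entity -> {attribute: logical type}.
# By convention in this sketch, the first attribute is the primary key.
LOGICAL_MODEL = {
    "customer": {"customer_id": "integer", "name": "text", "email": "text"},
    "product": {"product_id": "integer", "title": "text", "price": "decimal"},
}

# Physical choices for one specific DBMS (SQLite); another DBMS would map differently.
SQLITE_TYPES = {"integer": "INTEGER", "text": "TEXT", "decimal": "NUMERIC"}


def to_physical_ddl(model: dict[str, dict[str, str]]) -> list[str]:
    """Render each logical entity as a SQLite CREATE TABLE statement."""
    statements = []
    for entity, attributes in model.items():
        column_defs = []
        for i, (name, logical_type) in enumerate(attributes.items()):
            column = f"{name} {SQLITE_TYPES[logical_type]}"
            if i == 0:
                column += " PRIMARY KEY"
            column_defs.append(column)
        statements.append(f"CREATE TABLE {entity} ({', '.join(column_defs)})")
    return statements


conn = sqlite3.connect(":memory:")
for ddl in to_physical_ddl(LOGICAL_MODEL):
    print(ddl)
    conn.execute(ddl)  # the physical model now exists in a real database
```

Targeting a different database system would mean changing only the type mapping and the DDL rendering, which is the point of keeping the logical and physical models separate.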

What are the types of data models?

As we become increasingly reliant on technology, it’s become more and more essential to understand the different types of data models that exist. These models form the foundation of how data is organized and managed in computer systems, making them a crucial component of any data-driven business or project. In this section, we’ll take a closer look at some of the most commonly used data models and explore their strengths and weaknesses.

Hierarchical Data Model

The hierarchical data model is one of the oldest and most well-known models in computing. It’s based on a tree-like structure in which each node represents a record: a record can have multiple child records but only one parent. This model is intuitive and easy to understand, making it popular in early computer systems. However, it’s limited in its ability to handle complex relationships between data (a record that belongs to two parents must be duplicated), and changes to the structure of the model can be difficult and time-consuming.
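The tree structure can be sketched with a small Python class, assuming hypothetical records for a company, a department, and an employee. Each record holds a reference to exactly one parent, so every record is reached by a single path from the root.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    """A node in a hierarchical model: one parent, any number of children."""
    name: str
    # Excluded from repr/eq so printing or comparing does not loop back up the tree.
    parent: Optional["Record"] = field(default=None, repr=False, compare=False)
    children: list["Record"] = field(default_factory=list)

    def add_child(self, name: str) -> "Record":
        child = Record(name, parent=self)
        self.children.append(child)
        return child


def path_to_root(record: Record) -> list[str]:
    """Walk up the single chain of parents; each record has exactly one path."""
    path = []
    node: Optional[Record] = record
    while node is not None:
        path.append(node.name)
        node = node.parent
    return list(reversed(path))


company = Record("Company")
sales = company.add_child("Sales Dept")
alice = sales.add_child("Employee: Alice")
print(path_to_root(alice))  # ['Company', 'Sales Dept', 'Employee: Alice']
```

An employee who works for two departments would have to be stored twice, once under each parent, which is the limitation the network model was designed to remove.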

Network Data Model

The network data model builds upon the hierarchical model by allowing each record to have multiple parent and child records. This makes it more flexible than the hierarchical model, because it can represent more complex relationships between data, such as many-to-many relationships. However, applications must navigate the links between records explicitly, which makes queries complex to write and schema changes difficult; these drawbacks help explain why it was largely superseded by the relational model.
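A minimal sketch of the many-parent idea, assuming hypothetical employee, department, and project records represented as plain Python strings. Real network databases, such as those following the CODASYL model, store these links as named owner/member sets navigated record by record; this sketch only models the links themselves.

```python
from collections import defaultdict

# Hypothetical network-model sketch: each link goes from an owner (parent)
# record to a member (child) record, and a member may have several owners.
links: dict[str, set[str]] = defaultdict(set)  # member -> set of its owners


def link(owner: str, member: str) -> None:
    links[member].add(owner)


def members_of(owner: str) -> list[str]:
    return sorted(m for m, owners in links.items() if owner in owners)


link("Sales Dept", "Alice")
link("Project X", "Alice")  # Alice now has two parents, impossible in a strict tree
link("Project X", "Bob")

print(sorted(links["Alice"]))   # ['Project X', 'Sales Dept']
print(members_of("Project X"))  # ['Alice', 'Bob']
```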

Relational Data Model

The relational data model is the most widely used model in modern computing. It’s based on tables, where each row represents a record, and each column represents a field or attribute. Relationships between tables are established through keys, allowing for complex queries and efficient management of large amounts of data. This model is flexible, easy to use, and well-suited for a wide range of applications.
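The following sketch uses Python's built-in `sqlite3` module with two hypothetical tables. The `dept_id` column in `employee` is a foreign key that refers to the primary key of `department`, and a join uses the matching key values to combine the tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE department (
        dept_id INTEGER PRIMARY KEY,
        name    TEXT NOT NULL
    );
    CREATE TABLE employee (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        dept_id INTEGER REFERENCES department(dept_id)  -- foreign key
    );
    INSERT INTO department VALUES (1, 'Sales'), (2, 'Engineering');
    INSERT INTO employee VALUES (10, 'Alice', 1), (11, 'Bob', 2), (12, 'Carol', 1);
    """
)

# The relationship lives in the data (matching key values), not in pointers,
# so any query can combine the tables with a join.
rows = conn.execute(
    """
    SELECT e.name, d.name
    FROM employee AS e
    JOIN department AS d ON e.dept_id = d.dept_id
    WHERE d.name = 'Sales'
    ORDER BY e.name
    """
).fetchall()
print(rows)  # [('Alice', 'Sales'), ('Carol', 'Sales')]
```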

Object-Oriented Data Model

The object-oriented data model is based on the concept of objects, which can represent real-world entities or concepts. Each object can have properties, methods, and relationships with other objects, allowing for highly complex data structures. This model is well-suited for object-oriented programming languages like Java and C++, making it popular in software development. However, it can be difficult to use in non-programming contexts and requires specialized knowledge to implement effectively.
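A minimal sketch in Python, assuming hypothetical `Student` and `Course` classes. It shows properties, a method, and a relationship held as direct object references; it keeps everything in memory, whereas an object database would also persist the objects.

```python
from dataclasses import dataclass, field


@dataclass
class Course:
    code: str
    credits: int


@dataclass
class Student:
    """An object bundles state (properties) with behavior (methods)."""
    name: str
    courses: list[Course] = field(default_factory=list)  # references to other objects

    def enroll(self, course: Course) -> None:
        self.courses.append(course)

    def total_credits(self) -> int:
        return sum(c.credits for c in self.courses)


db101 = Course("DB101", 4)
alg200 = Course("ALG200", 3)
ana = Student("Ana")
ana.enroll(db101)
ana.enroll(alg200)
print(ana.total_credits())  # 7
```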

Document Data Model

The document data model is a relatively new model that’s gaining popularity in big data applications. It’s based on self-describing documents, typically stored as JSON or a binary variant of it, which can hold nested fields, arrays, and a wide range of data types, including text, numbers, and binary data such as images. Each document is stored as a separate entity, and documents in the same collection need not share the same fields, which allows highly scalable and distributed data management. This model is well-suited for applications that require flexible schema design.
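A minimal sketch, assuming a hypothetical `products` collection held as a Python list of dictionaries (the `_id` field name follows a common document-store convention but is only illustrative). The two documents have different fields, and one contains a nested sub-document.

```python
import json

# Two documents in the same hypothetical "products" collection. There is no
# fixed schema: each document carries only the fields it needs.
products = [
    {"_id": 1, "name": "T-shirt", "sizes": ["S", "M", "L"], "price": 15.0},
    {"_id": 2, "name": "E-book", "price": 9.5,
     "download": {"format": "PDF", "size_mb": 3.2}},  # nested sub-document
]

# Queries must cope with missing fields, e.g. by using dict.get().
downloadable = [p["name"] for p in products if p.get("download")]
print(downloadable)  # ['E-book']

# Documents are commonly stored and exchanged as JSON text.
print(json.dumps(products[1]))
```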

Data Modeling Tools and Techniques

As the world continues to generate more and more data, it’s becoming increasingly important for businesses and organizations to have effective tools and techniques for managing and analyzing that data. Data modeling is a crucial part of this process, as it allows businesses to create a visual representation of their data, and use that representation to better understand and make decisions about that data.

There are many different data modeling tools and techniques available to help with this process, each with its own strengths and weaknesses. Some of the most commonly used data modeling techniques include:

Entity Relationship Diagrams (ERDs):

ERDs are a visual representation of the relationships between entities in a system. They can be used to represent complex systems with many different relationships between data elements.

Unified Modeling Language (UML):

UML is a modeling language that can be used to represent software systems, including data structures and behavior. It is particularly useful for large, complex systems with many different components.

Data Flow Diagrams (DFDs):

DFDs are a visual representation of the flow of data through a system. They are particularly useful for systems that involve many different processes or components. DFDs can help to identify potential bottlenecks in the system and can be used to optimize data flow.

No matter which data modeling tool or technique you choose, the most important thing is to ensure that it’s well-suited to the needs of your business or organization. By taking the time to carefully evaluate your data modeling needs and selecting the right tools and techniques, you can gain a better understanding of your data and use that understanding to make better decisions.

What are the entities and attributes in the data model?

Entities are the fundamental building blocks of data modeling. They represent the real-world objects, events, or concepts that we want to model in our database. For example, if we were modeling a university, we might identify entities such as students, courses, professors, and departments.

Attributes, on the other hand, are the characteristics or properties that define an entity. Continuing with our university example, attributes of the student entity might include name, ID number, and GPA, while attributes of the course entity might include course code, title, and number of credits.

To identify entities and attributes, it’s important to have a clear understanding of the problem you’re trying to solve. Start by identifying the key components of your system, and then work to identify the entities and their associated attributes.

When identifying entities, it’s important to consider uniqueness: each instance of an entity should be distinguishable from all others. For example, in our university example, each student should have a unique ID number that distinguishes them from all other students.
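In a relational database, uniqueness is usually enforced with a primary key. A minimal sketch with Python's built-in `sqlite3` module, assuming a hypothetical `student` table keyed by ID number:

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The ID number is the primary key, so the database itself enforces uniqueness.
conn.execute(
    "CREATE TABLE student (student_id INTEGER PRIMARY KEY, name TEXT NOT NULL, gpa REAL)"
)
conn.execute("INSERT INTO student VALUES (1001, 'Maya Chen', 3.7)")

try:
    # A second student with the same ID number is rejected.
    conn.execute("INSERT INTO student VALUES (1001, 'Leo Park', 3.2)")
    duplicate_rejected = False
except sqlite3.IntegrityError as exc:
    duplicate_rejected = True
    print("Rejected:", exc)
```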

When identifying attributes, it’s important to consider their relevance to the entity. Each attribute should describe the entity it belongs to and be needed for the problem you’re solving. For example, if the database only tracks academic records, a student’s age may not be relevant and need not be modeled as an attribute of the student entity.

Once you have identified your entities and attributes, the next step is to create relationships between them. Relationships describe how entities are related to one another. For example, in our university example, a student entity might have a relationship with a course entity, indicating which courses the student is enrolled in.

There are three basic types of relationships: one-to-one, one-to-many, and many-to-many. In a one-to-one relationship, each instance of one entity corresponds to at most one instance of the other entity, and vice versa. In a one-to-many relationship, each instance of one entity can correspond to many instances of the other entity, but each instance of the other entity corresponds to at most one instance of the first. In a many-to-many relationship, each instance of either entity can correspond to many instances of the other.
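The three relationship types can be sketched as a SQLite schema for the university example, created with Python's built-in `sqlite3` module. The table and column names are assumptions, and the `student_card` table is a hypothetical addition used only to show a one-to-one relationship. A foreign key column implements one-to-many, a `UNIQUE` foreign key implements one-to-one, and a junction table implements many-to-many.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    PRAGMA foreign_keys = ON;  -- SQLite enforces REFERENCES only when this is on

    CREATE TABLE department (dept_id INTEGER PRIMARY KEY, name TEXT);

    -- One-to-many: each course belongs to one department; a department has many courses.
    CREATE TABLE course (
        course_code TEXT PRIMARY KEY,
        title       TEXT,
        dept_id     INTEGER NOT NULL REFERENCES department(dept_id)
    );

    CREATE TABLE student (student_id INTEGER PRIMARY KEY, name TEXT);

    -- One-to-one (hypothetical ID card): UNIQUE allows at most one card per student.
    CREATE TABLE student_card (
        card_no    TEXT PRIMARY KEY,
        student_id INTEGER NOT NULL UNIQUE REFERENCES student(student_id)
    );

    -- Many-to-many: a junction table holds one row per (student, course) pair.
    CREATE TABLE enrollment (
        student_id  INTEGER REFERENCES student(student_id),
        course_code TEXT REFERENCES course(course_code),
        PRIMARY KEY (student_id, course_code)
    );

    INSERT INTO department VALUES (1, 'Computer Science');
    INSERT INTO course VALUES ('CS101', 'Intro to Programming', 1), ('CS220', 'Databases', 1);
    INSERT INTO student VALUES (1, 'Maya'), (2, 'Leo');
    INSERT INTO student_card VALUES ('C-001', 1);
    INSERT INTO enrollment VALUES (1, 'CS101'), (1, 'CS220'), (2, 'CS220');
    """
)

try:
    conn.execute("INSERT INTO student_card VALUES ('C-002', 1)")  # a second card for Maya
    second_card_rejected = False
except sqlite3.IntegrityError:
    second_card_rejected = True

# Maya takes two courses and CS220 has two students: many-to-many in both directions.
courses_for_maya = conn.execute(
    "SELECT COUNT(*) FROM enrollment WHERE student_id = 1").fetchone()[0]
students_in_cs220 = conn.execute(
    "SELECT COUNT(*) FROM enrollment WHERE course_code = 'CS220'").fetchone()[0]
print(second_card_rejected, courses_for_maya, students_in_cs220)  # True 2 2
```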

What is normalization in data modeling?

In simple terms, normalization is the process of organizing data in a structured manner to reduce redundancy and prevent the inconsistencies (update anomalies) that redundancy causes.

To understand normalization, let’s first take a look at how data is typically stored. When we store data in a database, we often use tables to organize the information. Each table contains rows and columns, where each row represents a unique record and each column represents a field within that record.

In some cases, we may find that certain fields have repeating values across multiple records. For example, consider a database of customer orders, where each record contains a customer name, address, and order details. If a customer places multiple orders, their name and address are repeated in each order record. This redundancy wastes storage, and it also risks inconsistency: if the customer moves, every one of their order records must be updated, and missing one leaves conflicting addresses in the database.

This is where normalization comes in. By applying a set of rules to the data, we can eliminate redundancy and improve data organization. Normalization typically decomposes a table into smaller tables linked by keys, so that each fact is stored in only one place.
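A minimal sketch of this decomposition for the customer-orders example, using Python's built-in `sqlite3` module. The table names are hypothetical, and the sketch assumes for simplicity that a name plus an address identifies one customer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Unnormalized: the customer's name and address repeat on every order.
    CREATE TABLE orders_flat (
        order_id INTEGER PRIMARY KEY,
        customer_name TEXT, customer_address TEXT, item TEXT
    );
    INSERT INTO orders_flat VALUES
        (1, 'Alice', '12 Elm St', 'Lamp'),
        (2, 'Alice', '12 Elm St', 'Desk'),
        (3, 'Bob',   '9 Oak Ave', 'Chair');

    -- Normalized: each customer is stored once; orders refer to it by key.
    -- Simplifying assumption: name + address identifies a customer.
    CREATE TABLE customer (
        customer_id INTEGER PRIMARY KEY,
        name TEXT, address TEXT,
        UNIQUE (name, address)
    );
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(customer_id),
        item TEXT
    );
    INSERT INTO customer (name, address)
        SELECT DISTINCT customer_name, customer_address FROM orders_flat;
    INSERT INTO orders
        SELECT f.order_id, c.customer_id, f.item
        FROM orders_flat AS f
        JOIN customer AS c
          ON c.name = f.customer_name AND c.address = f.customer_address;
    """
)

# Alice moves: one UPDATE fixes every order, with no risk of conflicting copies.
conn.execute("UPDATE customer SET address = '3 Pine Rd' WHERE name = 'Alice'")
rows = conn.execute(
    """
    SELECT o.order_id, c.name, c.address
    FROM orders AS o JOIN customer AS c USING (customer_id)
    ORDER BY o.order_id
    """
).fetchall()
print(rows)
```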

The different levels of normalization are called normal forms. Each builds on the previous one, from the first normal form (1NF), the most basic, to the fifth normal form (5NF), the most advanced covered here. Let’s take a look at each of these in more detail:

First normal form (1NF):

This is the most basic level of normalization, where each field contains only atomic (indivisible) values and there are no repeating groups. For example, in a table of customer orders, each field should contain a single value, not a comma-separated list of products.
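As a sketch, assuming a hypothetical order record whose `items` field holds a comma-separated list, bringing it into 1NF means producing one row per item:

```python
# Not in 1NF: the "items" field holds a comma-separated list, not an atomic value.
order_unnormalized = {"order_id": 7, "customer": "Alice", "items": "Lamp, Desk, Chair"}


def to_first_normal_form(order: dict) -> list[dict]:
    """Split the multi-valued field so each row holds exactly one item."""
    return [
        {"order_id": order["order_id"], "customer": order["customer"], "item": item.strip()}
        for item in order["items"].split(",")
    ]


for row in to_first_normal_form(order_unnormalized):
    print(row)
# {'order_id': 7, 'customer': 'Alice', 'item': 'Lamp'}
# {'order_id': 7, 'customer': 'Alice', 'item': 'Desk'}
# {'order_id': 7, 'customer': 'Alice', 'item': 'Chair'}
```

The resulting rows are keyed by (`order_id`, `item`) and still repeat the customer on every row; the higher normal forms deal with that kind of redundancy.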

Second normal form (2NF):

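At this level, the table must already be in 1NF, and each non-key field must depend on the entire primary key rather than on just part of it. This only matters when the primary key is composite (made of several columns). Removing such partial dependencies eliminates redundancy and helps ensure data consistency.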
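A minimal sketch in plain Python, assuming hypothetical order lines keyed by (`order_id`, `product_id`). The hypothetical helper `holds` checks whether a functional dependency is consistent with the sample rows; sample data can only show that a dependency is not contradicted, so in practice dependencies come from the business rules.

```python
# Hypothetical order-line rows with composite key (order_id, product_id).
# product_name depends only on product_id, i.e. on part of the key: a 2NF violation.
order_lines = [
    {"order_id": 1, "product_id": "P1", "quantity": 2, "product_name": "Lamp"},
    {"order_id": 1, "product_id": "P2", "quantity": 1, "product_name": "Desk"},
    {"order_id": 2, "product_id": "P1", "quantity": 5, "product_name": "Lamp"},
]


def holds(rows, determinant, dependent):
    """True if, in these rows, equal determinant values always come with equal dependent values."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in determinant)
        value = tuple(row[c] for c in dependent)
        if seen.setdefault(key, value) != value:
            return False
    return True


print(holds(order_lines, ["product_id"], ["product_name"]))  # True: a partial dependency

# 2NF decomposition: move product_name to a table keyed by product_id alone.
products = {row["product_id"]: row["product_name"] for row in order_lines}
lines = [{k: row[k] for k in ("order_id", "product_id", "quantity")} for row in order_lines]
print(products)  # {'P1': 'Lamp', 'P2': 'Desk'}
```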

Third normal form (3NF):

At this level, the table must already be in 2NF, and each non-key field must depend only on the primary key, not on any other non-key field in the same table (a so-called transitive dependency). This further reduces redundancy and improves data organization.
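A minimal sketch, assuming hypothetical employee rows in which `dept_name` depends on `dept_id` rather than directly on the key `emp_id`:

```python
# Hypothetical rows (emp_id, name, dept_id, dept_name) keyed by emp_id.
# dept_name depends on dept_id, a non-key field: a transitive dependency (violates 3NF).
employees_flat = [
    (1, "Alice", 10, "Sales"),
    (2, "Bob", 20, "Engineering"),
    (3, "Carol", 10, "Sales"),
]

# 3NF decomposition: dept_name moves to a table keyed by dept_id.
employees = [(emp_id, name, dept_id) for emp_id, name, dept_id, _ in employees_flat]
departments = {dept_id: dept_name for _, _, dept_id, dept_name in employees_flat}

# Renaming a department now touches exactly one entry instead of every employee row.
departments[10] = "Sales & Marketing"

# Joining the two tables back recovers every fact, with the new name everywhere.
rejoined = [(e, n, d, departments[d]) for e, n, d in employees]
print(rejoined[0])  # (1, 'Alice', 10, 'Sales & Marketing')
```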

Fourth normal form (4NF):

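This level of normalization eliminates multi-valued dependencies. A multi-valued dependency occurs when a table stores two or more independent sets of values for the same key, for example a course’s teachers and its textbooks, so the table must contain every combination of them. Moving each independent set into its own table removes those repeated combinations and creates smaller, more focused tables that are easier to manage.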
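A minimal sketch in plain Python, assuming a hypothetical course whose teachers and textbooks are independent of each other (any teacher may use any textbook):

```python
from itertools import product

# Hypothetical facts: a course's teachers and textbooks are independent of each other.
teachers = {"Databases": ["Dr. Lee", "Dr. Ortiz", "Dr. Shah"]}
textbooks = {"Databases": ["Intro to SQL", "Data Modeling Basics", "Query Tuning"]}

# Not in 4NF: one (course, teacher, textbook) table must store every combination,
# so adding one textbook means adding one row per teacher.
flat = [
    (course, teacher, book)
    for course in teachers
    for teacher, book in product(teachers[course], textbooks[course])
]

# 4NF decomposition: one table per independent multi-valued fact.
course_teacher = [(c, t) for c, ts in teachers.items() for t in ts]
course_textbook = [(c, b) for c, bs in textbooks.items() for b in bs]
print(len(flat), len(course_teacher) + len(course_textbook))  # 9 6

# Joining on course rebuilds exactly the original rows, so no information is lost.
rejoined = {
    (c1, t, b) for c1, t in course_teacher for c2, b in course_textbook if c1 == c2
}
print(rejoined == set(flat))  # True
```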

Fifth normal form (5NF):

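This is the most advanced level of normalization covered here. It deals with join dependencies: a table is in 5NF when every lossless way of splitting it into smaller tables (one whose pieces can be joined back into exactly the original rows) is already implied by its candidate keys. In practice, it mostly affects tables that record relationships among three or more entities, which can sometimes be stored more cleanly as several smaller tables.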
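The idea behind 5NF can be illustrated with the classic supplier/part/project example, sketched in plain Python with hypothetical rows. The sketch assumes a business rule under which the three-column table is always exactly the join of its three two-column projections; it then checks that property on the sample data.

```python
# Hypothetical (supplier, part, project) rows. Assumed business rule: if a supplier
# supplies a part, the part is used in a project, and the supplier works with that
# project, then the supplier supplies that part to that project.
spj = {("S1", "P1", "J2"), ("S1", "P2", "J1"), ("S2", "P1", "J1"), ("S1", "P1", "J1")}

# Project the table onto its three pairs of columns.
sp = {(s, p) for s, p, _ in spj}
pj = {(p, j) for _, p, j in spj}
js = {(j, s) for s, _, j in spj}

# Joining just SP and PJ invents a row that was never in the table...
two_way = {(s, p, j) for s, p in sp for p2, j in pj if p == p2}
print(two_way - spj)  # {('S2', 'P1', 'J2')}

# ...but also requiring a match in JS reconstructs the table exactly. Under the
# assumed rule, storing the three pair tables instead is the 5NF design.
three_way = {(s, p, j) for s, p, j in two_way if (j, s) in js}
print(three_way == spj)  # True
```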

What is De-normalization?

De-normalization is the process of intentionally introducing redundancy into a database schema to improve query performance or simplify data access. A normalized database eliminates redundant data, which benefits data integrity and consistency. De-normalization adds some of that redundancy back to optimize query performance, at the cost of extra storage and the work of keeping every copy of the duplicated data consistent when it changes.

Benefits of De-normalization

There are several benefits to de-normalization, including:

- Improved query performance: By adding redundancies back into the schema, de-normalization can help to improve query performance. This is because it reduces the number of joins required to retrieve the necessary data.

- Simplified data access: De-normalization can make it easier to access data, especially in complex queries that involve multiple tables.

- Reduced data complexity: By simplifying the schema and reducing the number of tables involved in a query, de-normalization can help to reduce the overall complexity of the database.
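A minimal sketch of the trade-off with Python's built-in `sqlite3` module, assuming hypothetical `customer` and `orders` tables and a de-normalized `order_report` table built from them for reporting:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customer VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO orders VALUES (100, 1, 25.0), (101, 1, 40.0), (102, 2, 15.0);

    -- De-normalized copy for reporting: customer_name is duplicated on each order.
    CREATE TABLE order_report AS
        SELECT o.order_id, o.customer_id, c.name AS customer_name, o.total
        FROM orders AS o JOIN customer AS c USING (customer_id);
    """
)

# Benefit: reads need no join.
report = conn.execute(
    "SELECT customer_name, SUM(total) FROM order_report "
    "GROUP BY customer_name ORDER BY customer_name"
).fetchall()
print(report)  # [('Alice', 65.0), ('Bob', 15.0)]

# Cost: a rename must update the original and every copy, ideally in one transaction.
with conn:
    conn.execute("UPDATE customer SET name = 'Alicia' WHERE customer_id = 1")
    conn.execute("UPDATE order_report SET customer_name = 'Alicia' WHERE customer_id = 1")
```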

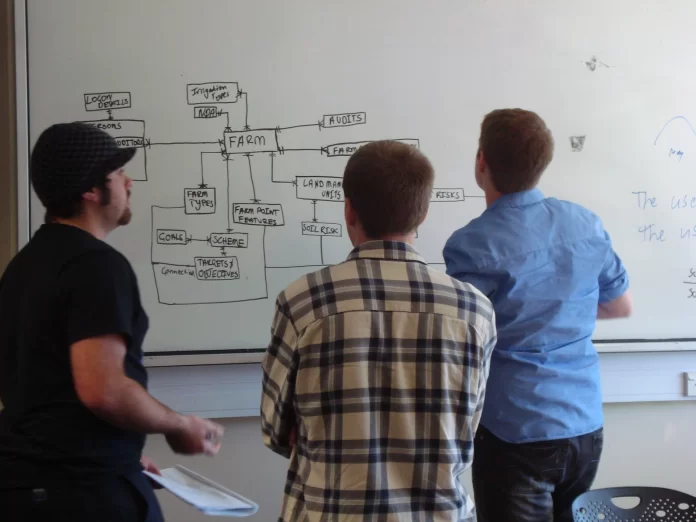

Creating a Conceptual Data Model

To create a conceptual data model, there are a few key steps to follow. The first step is to identify the entities that you will be working with. Entities are the “things” in your system that you need to track and manage. For example, if you’re working on a data model for a retail store, your entities might include customers, products, orders, and inventory.

Once you have identified your entities, the next step is to define the relationships between them. Relationships describe how the entities interact with each other. For example, in our retail store example, a customer might place an order for a product, which would reduce the inventory of that product.

Defining relationships is a critical part of the data modeling process because it helps ensure that your data is organized in a way that makes sense. It also helps ensure that you’re capturing all of the necessary information to effectively manage your data.

The final step in creating a conceptual data model is to create a data dictionary. A data dictionary is a comprehensive list of all of the data elements in your model. It includes information such as the data element name, its definition, and any associated business rules or constraints.

The data dictionary is an essential part of your data model because it provides a common language for all stakeholders involved in the project. It ensures that everyone is on the same page regarding the data elements, their definitions, and their relationships.
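A data dictionary can be as simple as a list of structured entries. A minimal sketch in Python, assuming hypothetical entries for the retail example and a hypothetical `describe` helper:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataElement:
    """One data dictionary entry: name, owning entity, definition, type, and rules."""
    name: str
    entity: str
    definition: str
    data_type: str
    rules: tuple[str, ...] = ()


# Hypothetical entries for the retail store example.
DATA_DICTIONARY = [
    DataElement("customer_id", "Customer", "Unique identifier assigned to each customer.",
                "integer", ("Required", "Unique")),
    DataElement("order_date", "Order", "Date the customer placed the order.",
                "date", ("Required", "Cannot be in the future")),
    DataElement("quantity_on_hand", "Inventory", "Units of a product currently in stock.",
                "integer", ("Required", "Must be >= 0")),
]


def describe(element_name: str) -> str:
    """Look up an element so every stakeholder reads the same definition."""
    for element in DATA_DICTIONARY:
        if element.name == element_name:
            rules = "; ".join(element.rules) or "none"
            return (f"{element.entity}.{element.name} ({element.data_type}): "
                    f"{element.definition} Rules: {rules}")
    raise KeyError(element_name)


print(describe("quantity_on_hand"))
```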

FAQ

What is the importance of data modeling in database design?

Data modeling is a crucial step in designing a database. It involves creating a conceptual representation of the data that will be stored in the database.

Here are three of the most important reasons data modeling matters:

- It helps in understanding the data: Data modeling helps to understand the data and its relationships better. It helps to identify the entities, attributes, and relationships between the data, which is essential to designing an effective database. By creating a data model, you can easily identify the data that needs to be stored and how it should be organized in the database.

- It reduces data redundancy: Data modeling helps to reduce data redundancy. By creating a data model, you can identify duplicate data and eliminate it, thus reducing the amount of storage space required for the database. This also helps to improve data consistency and accuracy.

- It improves data integrity: Data modeling helps to ensure data integrity. By identifying the relationships between the data, you can ensure that the data is consistent and accurate. This helps to improve the quality of the data stored in the database.

How is data modeling different from database design?

Data modeling and database design are related, but they serve different purposes. Data modeling is the process of creating a conceptual representation of the data that will be stored in the database. Database design, on the other hand, is the process of creating the actual database based on the data model.

Data modeling precedes database design: the data model is created first, and then the database is designed based on it.

What are the best practices to follow in data modeling?

Here are three of the most important best practices to follow in data modeling:

Understand the business requirements first, to determine what entities, attributes, and relationships need to be included in the data model.

Use standard naming conventions to ensure consistency and ease of understanding and maintenance.

Normalize the data to break it down into smaller, more manageable units. This helps to reduce data redundancy and improve data consistency and accuracy.

What are the most commonly used tools for data modeling?

Multiple data modeling tools are available, each with unique strengths and weaknesses. The following are some of the most commonly used:

ER/Studio: ER/Studio is a popular data modeling tool that allows you to create and manage data models. It includes features such as reverse engineering, forward engineering, and reporting.

Microsoft Visio: Microsoft Visio is a general-purpose diagramming application that can be used for many kinds of diagrams, including data models. It is also used for brainstorming, flowcharts, and process maps, which makes it a versatile tool for communicating and visualizing concepts.

Oracle SQL Developer Data Modeler: Oracle SQL Developer Data Modeler is a free data modeling tool that provides a visual interface for creating and managing data models. It includes features such as reverse engineering, forward engineering, and version control.

IBM InfoSphere Data Architect: IBM InfoSphere Data Architect is a comprehensive data modeling tool that includes features such as data profiling, data lineage, and impact analysis.

Toad Data Modeler: Toad Data Modeler is a popular data modeling tool that includes features such as reverse engineering, forward engineering, and collaboration tools. It supports a wide range of database management systems, making it a versatile choice for data modeling.