Discover the essential best practices for ADF pipelines to streamline your data workflow in Azure. Learn how to optimize your data integration and transformation process effectively.

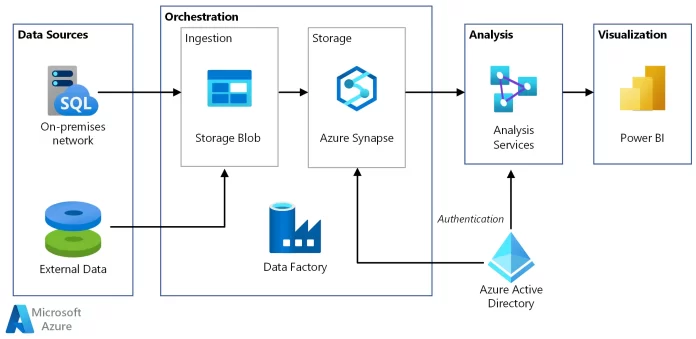

Azure Data Factory (ADF) is a powerful tool for managing and orchestrating data workflows within the Azure ecosystem. Whether you’re migrating data, transforming data, or building complex ETL processes, ADF pipelines offer the flexibility and scalability you need.

This comprehensive guide covers various aspects of pipeline creation, optimization, and maintenance. The practices we’ve outlined are valuable for ensuring smooth data integration processes and maximizing the efficiency of your pipelines.

10 Best Practices for ADF Pipelines

ADF pipelines are the building blocks that allow you to define, schedule, and automate the movement and transformation of data. Each pipeline consists of activities that define the specific tasks to be executed, such as copying data from a source to a destination, transforming data, and performing data quality checks. To ensure the success of your data projects, it’s crucial to follow best practices throughout the entire pipeline lifecycle.

1. Clear Pipeline Structure

When it comes to creating efficient and effective Azure Data Factory (ADF) pipelines, having a clear pipeline structure is an absolute must. A well-organized pipeline layout can make a significant difference in how smoothly your data integration process runs.

Why Does Structure Matter?

Imagine building a complex puzzle without the picture on the box as a reference. That’s what it’s like without a clear pipeline structure. Your activities, dependencies, and flow can become a tangled mess, making it difficult to troubleshoot issues and understand the overall process.

The Benefits of Clarity

- Easy Navigation: A structured pipeline allows you to quickly locate activities, datasets, and connections. This makes it simpler to find and fix errors.

- Efficient Debugging: When issues arise, a clear structure helps you identify the source of the problem faster. This minimizes downtime and keeps your data flowing smoothly.

- Scalability: As your data integration needs grow, a well-structured pipeline can be easily expanded without causing confusion.

Tips for Creating a Clear Pipeline Structure

- Logical Grouping: Group related activities together. For example, if you’re dealing with customer data, group extraction, transformation, and loading (ETL) activities for that data in a single section.

- Naming Conventions: Use meaningful names for activities, datasets, and pipelines. This makes it obvious what each component does.

- Visual Representation: Utilize visualization tools to map out your pipeline structure. Tools like diagrams can provide a clear overview of the entire process.

- Comments and Annotations: Add descriptions or annotations to your pipelines and activities to explain the purpose of complex activities or sections.

- Consistent Flow: Arrange your activities in a logical sequence. This makes it easier for anyone to follow the data flow.
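A naming convention is easier to keep when it can be checked automatically. The sketch below shows one minimal way to do that in Python. The prefixes (`PL_`, `DS_`, `ACT_`) and the pattern are assumptions chosen for illustration, not something ADF requires.

```python
import re

# Hypothetical convention: a type prefix, then underscore-separated words.
# Adjust the prefixes and the pattern to whatever your team agrees on.
NAME_PATTERNS = {
    "pipeline": re.compile(r"^PL_[A-Za-z0-9]+(_[A-Za-z0-9]+)*$"),
    "dataset": re.compile(r"^DS_[A-Za-z0-9]+(_[A-Za-z0-9]+)*$"),
    "activity": re.compile(r"^ACT_[A-Za-z0-9]+(_[A-Za-z0-9]+)*$"),
}


def find_naming_violations(components):
    """Return the (kind, name) pairs that do not match the convention."""
    violations = []
    for kind, name in components:
        pattern = NAME_PATTERNS.get(kind)
        # Unknown component kinds are reported too, so nothing slips through.
        if pattern is None or not pattern.match(name):
            violations.append((kind, name))
    return violations


if __name__ == "__main__":
    components = [
        ("pipeline", "PL_Customer_Load"),
        ("dataset", "DS_Customer_Raw"),
        ("activity", "Copy1"),  # default-style name that says nothing about intent
    ]
    for kind, name in find_naming_violations(components):
        print(f"{kind} name does not follow the convention: {name}")
```

A check like this could run as part of a review step, so unclear names are caught before they reach a shared environment.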

2. Parameterization is Key

Use parameterization for flexibility. Parameters let you change values dynamically without altering the pipeline’s core structure, so you never have to tear down and rebuild a pipeline just to point it at a different source or destination.

Understanding Parameterization in ADF Pipelines

Parameterization in ADF pipelines involves the practice of using parameters to externalize values that are subject to change, enabling configuration adjustments without modifying the underlying pipeline design. This not only enhances the reusability of pipelines but also streamlines maintenance and empowers organizations to swiftly respond to shifting business requirements.

The Flexibility Advantage

Imagine a scenario where you have an ADF pipeline responsible for transferring data from an on-premises database to a cloud-based data warehouse. Without parameterization, any modifications to the source or destination database credentials, table names, or query conditions would demand direct alterations to the pipeline itself. This could be time-consuming, error-prone, and detrimental to the pipeline’s stability.

By embracing parameterization, you can abstract these variable components into parameters. This means you can alter the source or destination details without touching the core pipeline structure. Whether it’s adjusting connection strings, changing file paths, or modifying filter conditions, parameterization offers unparalleled flexibility that significantly reduces the risk of human errors and accelerates deployment cycles.

Implementing Parameterization: Step-by-Step Guide

Let’s delve into the practical steps to implement parameterization within your ADF pipelines:

Step 1: Identify Variable Elements

Begin by identifying the elements within your pipeline that are prone to change. These could encompass connection strings, file paths, table names, or even SQL queries.

Step 2: Define Parameters

For each variable element identified, define a corresponding parameter in your pipeline. Parameters are akin to placeholders that will be replaced with actual values at runtime.

Step 3: Integrate Parameters

Now, integrate these parameters into the relevant sections of your pipeline activities. This involves referencing the parameters within your source and destination settings, SQL queries, and other configurable attributes.

Step 4: Externalize Parameter Values

Parameter values can be externalized in various ways, such as through Azure Key Vault, pipeline runtime parameters, or even configuration files. Opt for the method that aligns best with your security and operational needs.

Step 5: Test and Validate

Before deploying your parameterized pipeline to a production environment, conduct rigorous testing using different parameter values. This ensures that the pipeline behaves as expected under various scenarios.

Step 6: Monitor and Maintain

Post-deployment, monitor your parameterized pipelines regularly. As business requirements evolve, you can now adjust parameter values without disrupting the pipeline’s core functionality.
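To make the steps above more concrete, here is a small Python sketch of the idea: parameters are declared once, referenced inside a query, and filled in at run time. The dictionary only loosely follows the shape of a pipeline definition, and the names (`PL_Copy_Table`, `schemaName`, `tableName`) are hypothetical. The `render` function is a toy substitution written for this example, not ADF’s expression engine.

```python
import re

# Simplified stand-in for a pipeline definition. Names and values are
# hypothetical and exist only to illustrate the idea.
pipeline = {
    "name": "PL_Copy_Table",
    "parameters": {
        "schemaName": {"type": "String", "defaultValue": "dbo"},
        "tableName": {"type": "String"},  # no default: must be supplied per run
    },
    "query": "SELECT * FROM @{pipeline().parameters.schemaName}."
             "@{pipeline().parameters.tableName}",
}

PARAM_REF = re.compile(r"@\{pipeline\(\)\.parameters\.(\w+)\}")


def resolve_parameters(definition, run_values):
    """Merge defaults with run-time values and fail fast on missing ones."""
    resolved = {}
    for name, spec in definition["parameters"].items():
        if name in run_values:
            resolved[name] = run_values[name]
        elif "defaultValue" in spec:
            resolved[name] = spec["defaultValue"]
        else:
            raise ValueError(f"missing required parameter: {name}")
    return resolved


def render(text, values):
    """Toy substitution of parameter references with their resolved values."""
    return PARAM_REF.sub(lambda match: str(values[match.group(1)]), text)


if __name__ == "__main__":
    values = resolve_parameters(pipeline, {"tableName": "Customers"})
    print(render(pipeline["query"], values))  # SELECT * FROM dbo.Customers
```

Failing fast on a missing parameter is a deliberate choice here: a run that stops immediately is usually easier to diagnose than one that proceeds with an empty value.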

The Impact of Parameterization on Performance

Parameterization not only makes pipelines more flexible and easier to maintain, it also affects how efficiently you build and operate them.

Resource Optimization

In scenarios where you have multiple pipelines performing similar tasks but with distinct sources or destinations, parameterization enables you to create a single pipeline template that accepts different parameters. This reduces the need for redundant pipeline definitions and optimizes resource utilization.
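As a rough illustration of the single-template idea, the sketch below pairs one pipeline name with several parameter sets instead of keeping one pipeline per source. The pipeline name, table names, and folder paths are all hypothetical, and the "run request" is just a plain dictionary invented for this example.

```python
# Hypothetical per-source settings. In practice these might come from a
# configuration file or a control table.
SOURCES = [
    {"sourceTable": "sales.Orders", "sinkFolder": "raw/orders"},
    {"sourceTable": "sales.Customers", "sinkFolder": "raw/customers"},
    {"sourceTable": "hr.Employees", "sinkFolder": "raw/employees"},
]

TEMPLATE_NAME = "PL_Copy_Table"  # assumed name of the single reusable pipeline


def build_run_requests(template_name, parameter_sets):
    """Pair one pipeline name with each parameter set to describe the runs."""
    return [
        {"pipeline": template_name, "parameters": dict(params)}
        for params in parameter_sets
    ]


if __name__ == "__main__":
    for request in build_run_requests(TEMPLATE_NAME, SOURCES):
        print(request["pipeline"], request["parameters"])
```

Adding a new source then means adding one entry to the list rather than cloning and editing a pipeline.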

Efficient Troubleshooting

When an issue arises within your pipeline, parameterization expedites the troubleshooting process. Since each parameter corresponds to a specific aspect of the pipeline, identifying and rectifying problems becomes more straightforward and precise.

3. Incremental Loading

When dealing with large datasets, opt for incremental loading. Only new or changed data is transferred, which reduces processing time and makes better use of resources.

The Efficiency of Incremental Loading

Imagine you’re working with a colossal dataset. Instead of moving the entire dataset each time, incremental loading only transfers the new or modified data. This not only saves precious bandwidth but also minimizes the strain on your systems.

The Steps to Success

- Identification: Begin by identifying what constitutes new or modified data. Timestamps, version numbers, or unique identifiers can serve as markers.

- Extract and Compare: During each run, compare the source data against what was loaded previously, for example by filtering on the marker recorded by the last successful run, so that only the differences are selected.

- Merge or Update: Integrate the selected differences into the destination dataset. Depending on the scenario, you might update existing records or append new ones.
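The three steps above can be sketched with a high-watermark timestamp, which is one common way to identify changes (not the only one). The example uses Python’s built-in `sqlite3` module with in-memory databases so it runs anywhere. The `customers` and `watermark` tables and their columns are assumptions made for this illustration.

```python
import sqlite3

TABLE_DDL = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, modified_at TEXT)"


def make_demo_databases():
    """Create in-memory source and target databases with hypothetical data."""
    source = sqlite3.connect(":memory:")
    target = sqlite3.connect(":memory:")
    source.execute(TABLE_DDL)
    target.execute(TABLE_DDL)
    source.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "Ada", "2024-01-01T00:00:00"), (2, "Grace", "2024-01-02T00:00:00")],
    )
    # The watermark records the newest modified_at value already loaded.
    target.execute("CREATE TABLE watermark (table_name TEXT PRIMARY KEY, last_modified TEXT)")
    target.execute("INSERT INTO watermark VALUES ('customers', '1900-01-01T00:00:00')")
    return source, target


def incremental_load(source, target):
    """Copy rows changed since the watermark, upsert them, advance the watermark."""
    (watermark,) = target.execute(
        "SELECT last_modified FROM watermark WHERE table_name = 'customers'"
    ).fetchone()
    # Identification and comparison: only rows newer than the watermark.
    changed = source.execute(
        "SELECT id, name, modified_at FROM customers WHERE modified_at > ?",
        (watermark,),
    ).fetchall()
    # Merge: INSERT OR REPLACE adds new ids and overwrites existing ones.
    target.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", changed)
    if changed:
        newest = max(row[2] for row in changed)
        target.execute(
            "UPDATE watermark SET last_modified = ? WHERE table_name = 'customers'",
            (newest,),
        )
    target.commit()
    return len(changed)


if __name__ == "__main__":
    source, target = make_demo_databases()
    print("first run:", incremental_load(source, target), "rows")
    source.execute(
        "UPDATE customers SET name = 'Grace H.', modified_at = '2024-01-03T00:00:00' WHERE id = 2"
    )
    source.execute("INSERT INTO customers VALUES (3, 'Linus', '2024-01-04T00:00:00')")
    print("second run:", incremental_load(source, target), "rows")
    print("third run:", incremental_load(source, target), "rows")
```

Note that this approach relies on the source reliably updating `modified_at` on every change, and it does not detect deleted rows. Those cases need a different marker or a separate reconciliation step.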

The Advantages of Incremental Loading

- Speedy Updates: Incremental loading drastically reduces processing time. With smaller data chunks, transfers are faster, and updates are quicker.

- Less Resource Strain: Since you’re moving less data, your system’s resources are utilized more efficiently. This can lead to a more stable and responsive environment.

- Historical Tracking: If you append changes instead of overwriting existing records, incremental loading can maintain a trail of changes over time, enabling historical analysis and comparisons.

When to Choose Incremental Loading

- Frequent Updates: If your data source is frequently updated, incremental loading prevents redundant transfers.

- Large Datasets: Handling large datasets becomes manageable, as only changes need to be processed.

- Real-time Demands: For applications requiring real-time or near-real-time updates, incremental loading keeps you in sync without overloading the system.

4. Error Handling and Monitoring

Implement robust error handling. Set up alerts and monitoring to quickly detect and address any failures in your pipeline.

The Need for Error Handling

Picture this: your data transformation activity hits a snag due to unexpected input. Without error handling, this glitch could cascade, causing chaos downstream. Error handling acts as a safety net, preventing hiccups from turning into disasters.

The Power of Monitoring

Monitoring, on the other hand, is like having a bird’s-eye view of the data landscape. It lets you track the progress of your pipelines, detect anomalies, and intervene before minor issues evolve into full-blown problems.

Crafting a Robust Strategy

- Predictive Alerts: Set up alerts on early warning signs, such as rising run durations or repeated retries, so you can act before an issue turns into a failure. This proactive approach can save you from last-minute fire drills.

- Detailed Logging: Log detailed information about each step of your pipeline. This is like leaving breadcrumbs to trace back and identify the root cause of any issue.

- Custom Notifications: Configure notifications to reach you via email, messages, or dedicated monitoring tools. Stay informed without constant manual checks.

- Conditional Flows: Design your pipeline with conditional logic that guides data through different paths based on certain conditions. This keeps the process on track, even when issues arise.
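The ideas in this list (logging, notification, and a conditional failure path) can be sketched in a few lines of Python. This is a generic illustration under assumed names, not ADF’s own retry mechanism: `run_with_retry`, `run_pipeline`, and the flaky step are all hypothetical helpers.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_with_retry(step_name, step, retries=2, delay_seconds=0.0):
    """Run a step, retrying on failure; return (succeeded, result_or_error)."""
    last_error = None
    for attempt in range(1, retries + 2):  # first try plus `retries` retries
        try:
            result = step()
            log.info("%s succeeded on attempt %d", step_name, attempt)
            return True, result
        except Exception as error:  # broad on purpose: this is the safety net
            last_error = error
            log.warning("%s failed on attempt %d: %s", step_name, attempt, error)
            if attempt <= retries:
                time.sleep(delay_seconds)
    return False, last_error


def run_pipeline(transform, on_failure):
    """Follow the success path, or hand the error to a failure handler."""
    succeeded, outcome = run_with_retry("transform", transform)
    if succeeded:
        return outcome
    on_failure(outcome)  # for example: send a notification
    return None


def make_flaky_step(failures_before_success):
    """Build a demo step that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    def step():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise RuntimeError("unexpected input")
        return "transformed"

    return step


if __name__ == "__main__":
    print(run_pipeline(make_flaky_step(1), on_failure=print))
```

Keeping the failure handler as a parameter means the same flow can send an email in production and simply collect errors in a test.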

The Benefits Are Clear

- Minimized Downtime: Swift error detection and handling minimize downtime, ensuring data keeps flowing smoothly.

- Data Integrity: Robust error handling maintains the integrity of your data, preventing corruption and loss.

- Operational Efficiency: Monitoring and handling errors efficiently reduce manual interventions and improve resource utilization.

When the Unexpected Strikes

- Stay Calm: Errors are part of the journey. When they arise, stay calm and methodically follow your error-handling playbook.

- Learn and Adapt: Each error is a lesson. Use them to fine-tune your error-handling strategy for even better future performance.

5. Security Measures

Prioritize security. Use managed identities and secure your data with encryption both at rest and in transit. Implementing robust security measures is not just a choice; it’s a responsibility that ensures your data remains confidential, intact, and available.

The Imperative of Security

Imagine your data as a treasure chest. Without proper security measures, it becomes vulnerable to breaches, leaks, and unauthorized access. Security isn’t just a feature; it’s the foundation on which trust is built.

Layers of Protection

- Authentication and Authorization: Utilize strong authentication methods to verify the identities of users and applications accessing your pipeline. Apply granular authorization to ensure they can only access what’s necessary.

- Encryption: Encrypt your data at rest and during transit. This shields your data from prying eyes, whether it’s stored in databases or traveling through networks.

- Managed Identities: Leverage managed identities to ensure secure access to resources without exposing sensitive credentials.
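One practical way to support the "no exposed credentials" goal is to scan definitions for secrets written inline. The Python sketch below is a minimal example of that idea. The list of sensitive key names and the two sample definitions are assumptions for illustration, and a real check would need a list suited to your connectors.

```python
# Assumed list of key names that should never hold a plain-text value.
SENSITIVE_KEYS = {"password", "accountkey", "connectionstring", "secret", "sastoken"}


def find_inline_secrets(node, path=""):
    """Return paths of sensitive-looking keys whose value is a plain string."""
    findings = []
    if isinstance(node, dict):
        for key, value in node.items():
            child_path = f"{path}.{key}" if path else key
            if key.lower() in SENSITIVE_KEYS and isinstance(value, str):
                findings.append(child_path)
            else:
                # A nested object (such as a reference to a secret store)
                # is not a plain-text secret, so keep walking instead.
                findings.extend(find_inline_secrets(value, child_path))
    elif isinstance(node, list):
        for index, item in enumerate(node):
            findings.extend(find_inline_secrets(item, f"{path}[{index}]"))
    return findings


if __name__ == "__main__":
    # Hypothetical definitions: one with an inline password, one that
    # points to a secret stored elsewhere.
    inline = {"properties": {"typeProperties": {"password": "hunter2"}}}
    referenced = {
        "properties": {
            "typeProperties": {
                "password": {"type": "AzureKeyVaultSecret", "secretName": "db-password"}
            }
        }
    }
    print(find_inline_secrets(inline))      # ['properties.typeProperties.password']
    print(find_inline_secrets(referenced))  # []
```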

Principles of Defense

- Least Privilege: Grant the minimum necessary permissions. Limiting access reduces the potential impact of any breach.

- Audit Trails: Maintain comprehensive audit logs. These logs act as a forensic trail to investigate and understand any security incidents.

- Regular Updates: Keep any pipeline components you manage yourself, such as a self-hosted integration runtime, updated with the latest security patches. Outdated software can become a gateway for attackers.

Constant Vigilance

- Security Testing: Regularly conduct security assessments and penetration testing. Uncover vulnerabilities before they’re exploited.

- Monitoring and Alerts: Set up real-time monitoring and alerts to detect suspicious activities. Early detection can prevent potential breaches.

Adhere to industry-specific compliance standards and regulations when handling sensitive data. Ensure that your pipeline processes align with data protection regulations such as GDPR or HIPAA.

Embracing Security Culture

Security isn’t just a checklist; it’s a culture. Educate your team about security practices, encourage them to report any anomalies, and create an environment where security is everyone’s responsibility.

6. Resource Management

Optimize resource allocation. Properly size your infrastructure to avoid overutilization or underutilization of resources. Efficient resource management ensures smooth operations, prevents wastage, and keeps your data integration endeavors in harmony.

Optimization at Its Core

- Right Sizing: Allocate resources based on your pipeline’s needs. Overprovisioning leads to unnecessary costs, while underprovisioning can hinder performance.

- Auto-scaling: Implement auto-scaling mechanisms to dynamically adjust resource allocation based on workload demands.

- Shared Resources: Utilize shared resources where possible. This optimizes utilization and reduces the need for excessive provisioning.

Strategic Allocation

- Prioritize Critical Tasks: Assign more resources to critical tasks to ensure they are completed swiftly, while less critical tasks can make do with fewer resources.

- Balanced Distribution: Distribute resources evenly across pipelines to prevent bottlenecks and ensure fair access.

- Time-based Allocation: Adjust resource allocation based on the time of day or peak usage hours. This prevents resource congestion during high-demand periods.

Monitoring and Tuning

- Continuous Monitoring: Regularly monitor resource utilization and performance metrics. This allows you to spot inefficiencies and make necessary adjustments.

- Performance Tuning: Fine-tune your resource allocation based on observed performance patterns. This iterative process optimizes efficiency over time.
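As a small illustration of turning monitoring into a sizing decision, the sketch below labels average utilization against two thresholds. The thresholds (30% and 85%) and the sample values are assumptions chosen for the example. Sensible limits depend on your workload.

```python
from statistics import mean


def classify_utilization(samples, low=0.30, high=0.85):
    """Label average utilization (0.0 to 1.0) against assumed thresholds."""
    average = mean(samples)
    if average < low:
        return "underutilized"  # candidate for scaling down
    if average > high:
        return "overutilized"   # candidate for scaling up
    return "ok"


if __name__ == "__main__":
    # Hypothetical utilization samples collected over a day.
    print(classify_utilization([0.10, 0.20, 0.15]))
    print(classify_utilization([0.90, 0.95, 0.88]))
    print(classify_utilization([0.50, 0.60, 0.55]))
```

Averages hide short spikes, so a fuller version might also look at peak values before recommending a change.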

The Impact of Effective Resource Management

- Cost Savings: Properly allocated resources prevent unnecessary spending on overprovisioning.

- Enhanced Performance: Well-managed resources ensure smooth and efficient data movement.

- Scalability: Efficient resource allocation paves the way for seamless scaling as your data integration needs grow.

7. Testing and Validation

Before deploying pipelines, thoroughly test and validate them in a non-production environment. This reduces the risk of errors affecting live data. Just as a bridge undergoes stress tests before carrying its first load, your pipelines must be rigorously tested and validated to ensure they can withstand the data flow.

The Importance of Testing

Imagine launching a ship without ensuring it can navigate the waters. Similarly, releasing pipelines into production without testing could lead to unforeseen issues, data corruption, and downtime.

The Art of Validation

Validation is like meticulously inspecting the ship’s hull for any leaks before setting sail. It’s about ensuring that data is accurate, complete, and conforms to expectations.

Types of Testing and Validation

- Unit Testing: Test individual pipeline components and activities. Ensure each part works correctly before integrating them into the larger system.

- Integration Testing: Assess the interactions between different components to identify any compatibility issues.

- Data Validation: Verify that data is accurately transformed, loaded, and processed according to predefined rules.

- Error Handling Testing: Simulate error scenarios to ensure your pipeline responds correctly and doesn’t break under pressure.

Setting Up a Testing Environment

- Non-Production Environment: Create a dedicated environment for testing. This prevents any potential issues from affecting your live data.

- Realistic Data: Use real-world data scenarios to mimic actual production conditions. This helps identify potential bottlenecks and performance concerns.

The Validation Dance

- Data Profiling: Understand your data’s structure and characteristics. Profiling reveals insights crucial for successful validation.

- Validation Rules: Define clear validation rules based on business logic and data requirements.

- Automated Validation: Implement automated validation processes to streamline the testing phase and catch issues early.
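Validation rules and automated validation can be as simple as a set of named checks applied to every row. The Python sketch below shows that pattern. The rules and the sample rows are hypothetical, and real rules would come from your own business logic.

```python
# Hypothetical validation rules: each name maps to a check on one row.
RULES = {
    "id is present": lambda row: row.get("id") is not None,
    "amount is non-negative": lambda row: (
        isinstance(row.get("amount"), (int, float)) and row["amount"] >= 0
    ),
    "country is a 2-letter code": lambda row: (
        isinstance(row.get("country"), str) and len(row["country"]) == 2
    ),
}


def validate_rows(rows, rules):
    """Apply every rule to every row; return (row_index, rule_name) failures."""
    failures = []
    for index, row in enumerate(rows):
        for name, check in rules.items():
            if not check(row):
                failures.append((index, name))
    return failures


if __name__ == "__main__":
    rows = [
        {"id": 1, "amount": 10.0, "country": "DE"},
        {"id": None, "amount": -5, "country": "Germany"},
    ]
    for index, rule in validate_rows(rows, RULES):
        print(f"row {index} failed: {rule}")
```

Because each rule has a readable name, the failure report doubles as documentation of what the data is expected to look like.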

The Benefits Are Clear

- Reduced Risks: Rigorous testing minimizes the risk of errors and issues in your pipeline.

- Confidence in Deployment: Thoroughly tested pipelines can be deployed with confidence, knowing they’ve undergone rigorous scrutiny.

- Cost Savings: Addressing issues early in the testing phase is more cost-effective than fixing them after deployment.

8. Documentation

Maintain comprehensive documentation. Describe your pipeline’s purpose, structure, and any specific configurations for future reference. Comprehensive documentation is not just a record; it’s the compass that helps you navigate the intricate pathways of your pipelines.

Crafting a Documentation Masterpiece

- Clear Purpose: Begin with a succinct overview of your pipeline’s purpose and objectives. This sets the stage for what’s to come.

- Architecture Overview: Provide a high-level view of your pipeline’s architecture. This helps readers grasp the overall structure.

- Activity Descriptions: Detail each activity’s function, inputs, outputs, and transformations. Think of this as introducing the characters in your story.

Key Components

- Data Sources and Destinations: Explain where your data comes from and where it’s headed. Include connection details and any transformation logic.

- Dependencies and Scheduling: Outline dependencies between activities and scheduling patterns. This ensures a smooth flow of data.

- Error Handling: Describe how your pipeline handles errors and failures. This prepares users for unexpected scenarios.
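Documentation stays accurate more easily when part of it is generated from the pipeline definition itself. The Python sketch below produces a short text summary from a simplified definition. The dictionary layout, the activity names, and the activity types are assumptions for illustration and are much simpler than a real pipeline definition.

```python
# Simplified, hypothetical pipeline definition used only for this example.
EXAMPLE = {
    "name": "PL_Customer_Load",
    "description": "Loads customer data into the warehouse.",
    "activities": [
        {
            "name": "ACT_Copy_Customers",
            "type": "Copy",
            "description": "Copies raw customer rows to staging.",
            "dependsOn": [],
        },
        {
            "name": "ACT_Transform_Customers",
            "type": "Transform",
            "dependsOn": ["ACT_Copy_Customers"],  # description left out on purpose
        },
    ],
}


def describe_pipeline(definition):
    """Build a plain-text summary: purpose, activities, and dependencies."""
    lines = [
        f"Pipeline: {definition['name']}",
        f"Purpose: {definition.get('description', '(not documented)')}",
        "Activities:",
    ]
    for activity in definition["activities"]:
        depends = ", ".join(activity.get("dependsOn", [])) or "none"
        lines.append(f"- {activity['name']} ({activity['type']}), depends on: {depends}")
        lines.append(f"  {activity.get('description', '(not documented)')}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(describe_pipeline(EXAMPLE))
```

The "(not documented)" placeholder makes gaps visible, which can be a gentle nudge to fill in missing descriptions.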

Documenting with Visuals

- Diagrams: Utilize diagrams to visually represent the pipeline’s flow. A picture is worth a thousand words, especially in complex scenarios.

- Flowcharts: Create flowcharts illustrating the sequence of activities, decision points, and data paths.

Maintaining and Sharing

- Versioning: Just as software is versioned, so should your documentation. Keep track of changes and updates.

- Collaboration: Enable collaboration by storing your documentation in a shared repository. This fosters teamwork and knowledge sharing.

The Value of Documentation

- Knowledge Transfer: Documentation ensures that your hard-earned knowledge isn’t lost when team members change or when time passes.

- Troubleshooting: When issues arise, documentation becomes your troubleshooting companion, providing insights into the pipeline’s inner workings.

- Efficiency: A well-documented pipeline allows users to understand and utilize it efficiently, reducing the learning curve.

9. Regular Updates

Stay up-to-date with ADF’s latest features and updates. Incorporating new functionalities can enhance the performance of your pipelines.

Regular updates keep your pipelines agile, efficient, and aligned with the ever-evolving landscape of data integration.

The Need for Continuous Evolution

Imagine a garden left untended – over time, it becomes overgrown and loses its vitality. Similarly, pipelines that aren’t regularly updated can become cumbersome and outdated.

Embracing Innovation

- Feature Integration: Keep an eye on ADF updates and new features. Incorporate relevant ones to enhance the capabilities of your pipelines.

- Performance Optimization: Regular updates can bring performance enhancements and optimizations, leading to faster and more efficient data processing.

Maintenance and Bug Fixes

- Bug Squashing: New updates often come with bug fixes. Regular updates ensure that your pipelines remain free from glitches and inconsistencies.

- Security Patches: Stay protected by applying security patches promptly. Cyber threats are ever-present, and regular updates strengthen your defense.

The Update Strategy

- Scheduled Updates: Set up a schedule for updating your pipelines. This prevents updates from disrupting critical processes.

- Testing Ground: Before deploying updates to production, test them in a controlled environment to identify any potential issues.

Monitoring the Impact

- Performance Monitoring: After updates, closely monitor performance to ensure that improvements are realized and any adverse effects are promptly addressed.

- Feedback Loop: Encourage users to provide feedback on how updates have impacted their experience. This insight can guide future update decisions.

The Advantages Are Clear

- Competitive Edge: Regularly updated pipelines can give you a competitive edge by harnessing the latest technologies and techniques.

- Adaptability: Evolving pipelines can adapt to changing data requirements, ensuring they remain relevant and effective.

- Sustainability: Continual updates prolong the lifespan of your pipelines, postponing the need for major overhauls.

10. Performance Tuning

Regularly review and fine-tune your pipeline’s performance. Identify bottlenecks and optimize data flow for maximum efficiency.

The Symphony of Optimization

- Data Volume: Consider the scale of your data. Adjust your pipeline’s configuration to handle large volumes without bogging down.

- Parallel Processing: Design your pipeline to execute tasks concurrently, distributing the workload and accelerating processing.
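To show what concurrent execution buys you, here is a minimal Python sketch that runs several independent copy tasks in parallel with a thread pool. The `copy_table` function is a hypothetical stand-in that just sleeps. The pattern suits tasks that spend most of their time waiting on I/O and do not depend on each other.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def copy_table(table_name):
    """Stand-in for an I/O-bound copy task (hypothetical)."""
    time.sleep(0.1)  # simulate waiting on a network or disk transfer
    return f"{table_name}: copied"


def copy_all(table_names, max_workers=4):
    """Run the copy tasks concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(copy_table, table_names))


if __name__ == "__main__":
    started = time.perf_counter()
    for line in copy_all(["orders", "customers", "employees", "products"]):
        print(line)
    print(f"elapsed: {time.perf_counter() - started:.2f}s")
```

The `max_workers` value is the knob to tune: too low leaves capacity unused, too high can overload the source or destination system.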

Delicate Balancing Act

- Data Compression: Utilize compression techniques to reduce the size of data being transferred. This boosts speed and conserves resources.

- Memory Management: Optimize memory allocation to prevent resource bottlenecks and ensure efficient data processing.
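The effect of compression is easy to see with a quick experiment. The Python sketch below compresses a repetitive CSV-like payload with the standard `gzip` module and compares sizes. The sample data is made up, and real savings depend heavily on how repetitive your data is.

```python
import gzip


def compress_text(text):
    """Encode text as UTF-8 and compress it with gzip."""
    return gzip.compress(text.encode("utf-8"))


def decompress_text(blob):
    """Reverse compress_text: decompress and decode back to a string."""
    return gzip.decompress(blob).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical, highly repetitive payload.
    payload = "id,name,country\n" + "1,Ada,DE\n" * 1000
    compressed = compress_text(payload)
    print("original bytes:  ", len(payload.encode("utf-8")))
    print("compressed bytes:", len(compressed))
    assert decompress_text(compressed) == payload  # round trip is lossless
```

Compression trades CPU time for transfer size, so it tends to pay off most when the network, not the processor, is the bottleneck.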

Observing and Adapting

- Monitoring: Continuously monitor the performance of your pipeline. Identify bottlenecks, slow points, and areas for improvement.

- Benchmarking: Establish performance benchmarks to gauge your pipeline’s efficiency and compare against desired outcomes.

Profiling and Analysis

- Data Profiling: Examine your data’s characteristics to determine where optimizations are needed. This helps in making informed tuning decisions.

- Execution Plans: Analyze execution plans to understand the sequence of activities and pinpoint areas that require attention.

Continuous Refinement

- Iterative Approach: Performance tuning isn’t a one-time task. It’s an ongoing process that requires constant refinement and adjustments.

- Testing Ground: Test tuning changes in a controlled environment before deploying them to production to ensure they have the desired impact.

By adhering to these best practices, you can ensure your ADF pipelines are not only functional but also optimized for top-notch performance. Keep evolving your approach to stay ahead in the dynamic world of data integration.